Difference between revisions of "Directory:Jon Awbrey/Papers/Futures Of Logical Graphs"

Jon Awbrey (talk | contribs) (→Introduction: TeX formats) |

Jon Awbrey (talk | contribs) (→Categories of structured individuals: waybak links) |

||

| (47 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

{{DISPLAYTITLE:Futures Of Logical Graphs}} | {{DISPLAYTITLE:Futures Of Logical Graphs}} | ||

| − | This article develops an extension of [[Charles Sanders Peirce]]'s [[Logical | + | '''Author: [[User:Jon Awbrey|Jon Awbrey]]''' |

| + | |||

| + | This article develops an extension of [[Charles Sanders Peirce]]'s [[Logical Graphs]]. | ||

==Introduction== | ==Introduction== | ||

| Line 6: | Line 8: | ||

I think I am finally ready to speculate on the futures of logical graphs that will be able to rise to the challenge of embodying the fundamental logical insights of Peirce. | I think I am finally ready to speculate on the futures of logical graphs that will be able to rise to the challenge of embodying the fundamental logical insights of Peirce. | ||

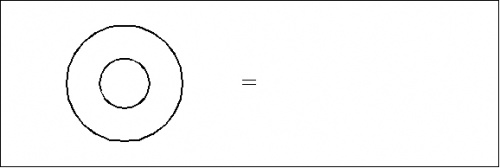

| − | For the sake of those who may be unfamiliar with it, let us first divert ourselves with an exposition of a standard way that graphs of the order that Peirce considered, those embedded in a continuous manifold like a plane sheet of paper, with or without the paper bridges that Peirce used to augment his genus, can be represented as parse-strings in | + | For the sake of those who may be unfamiliar with it, let us first divert ourselves with an exposition of a standard way that graphs of the order that Peirce considered, those embedded in a continuous manifold like a plane sheet of paper, with or without the paper bridges that Peirce used to augment his genus, can be represented as parse-strings in ASCII and sculpted into pointer-structures in computer memory. |

A blank sheet of paper can be represented as a blank space in a line of text, but that way of doing it tends to be confusing unless the logical expression under consideration is set off in a separate display. | A blank sheet of paper can be represented as a blank space in a line of text, but that way of doing it tends to be confusing unless the logical expression under consideration is set off in a separate display. | ||

| Line 13: | Line 15: | ||

{| align="center" cellpadding="10" | {| align="center" cellpadding="10" | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_3_Visible_Frame.jpg|500px]] |

|} | |} | ||

| − | This can be written inline as <math>{}^{\backprime\backprime} \texttt{(~(~)~)} = \quad {}^{\prime\prime}</math> or set off in a text display: | + | This can be written inline as <math>{}^{\backprime\backprime} \texttt{(} ~ \texttt{(} ~ \texttt{)} ~ \texttt{)} = \quad {}^{\prime\prime}~\!</math> or set off in a text display: |

| − | {| align="center" | + | {| align="center" cellspacing="10" |

| − | | width="33%" | <math>\texttt{(~(~)~)}</math> | + | | width="33%" | <math>\texttt{(} ~ \texttt{(} ~ \texttt{)} ~ \texttt{)}~\!</math> |

| − | | width="34%" | <math>=\!</math> | + | | width="34%" | <math>=~\!</math> |

| width="33%" | | | width="33%" | | ||

|} | |} | ||

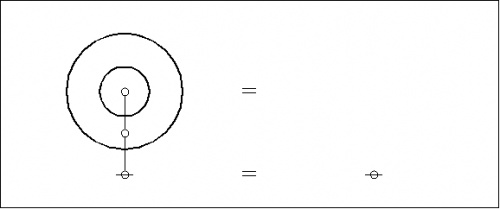

| − | When we turn to representing the corresponding expressions in computer memory, where they can be manipulated with utmost facility, we begin by transforming the planar graphs into their topological duals. | + | When we turn to representing the corresponding expressions in computer memory, where they can be manipulated with utmost facility, we begin by transforming the planar graphs into their topological duals. The planar regions of the original graph correspond to nodes (or points) of the dual graph, and the boundaries between planar regions in the original graph correspond to edges (or lines) between the nodes of the dual graph. |

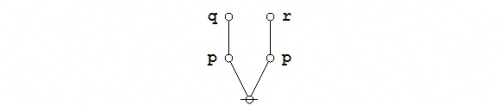

For example, overlaying the corresponding dual graphs on the plane-embedded graphs shown above, we get the following composite picture: | For example, overlaying the corresponding dual graphs on the plane-embedded graphs shown above, we get the following composite picture: | ||

{| align="center" cellpadding="10" | {| align="center" cellpadding="10" | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_4_Visible_Frame.jpg|500px]] |

|} | |} | ||

| − | The outermost region of the plane-embedded graph is singled out for special consideration and the corresponding node of the dual graph is referred to as its ''root node''. | + | The outermost region of the plane-embedded graph is singled out for special consideration and the corresponding node of the dual graph is referred to as its ''root node''. By way of graphical convention in the present text, the root node is indicated by means of a horizontal strike-through. |

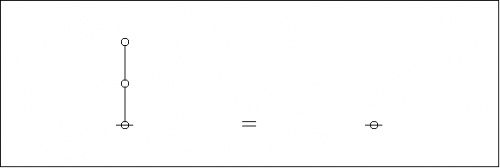

Extracting the dual graph from its composite matrix, we get this picture: | Extracting the dual graph from its composite matrix, we get this picture: | ||

{| align="center" cellpadding="10" | {| align="center" cellpadding="10" | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_5_Visible_Frame.jpg|500px]] |

|} | |} | ||

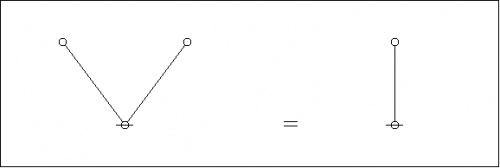

It is easy to see the relationship between the parenthetical expressions of Peirce's logical graphs, that somewhat clippedly picture the ordered containments of their formal contents, and the associated dual graphs, that constitute the species of rooted trees here to be described. | It is easy to see the relationship between the parenthetical expressions of Peirce's logical graphs, that somewhat clippedly picture the ordered containments of their formal contents, and the associated dual graphs, that constitute the species of rooted trees here to be described. | ||

| − | In the case of our last example, a moment's contemplation of the following picture will lead us to see that we can get the corresponding parenthesis string by starting at the root of the tree, climbing up the left side of the tree until we reach the top, then climbing back down the right side of the tree until we return to the root, all the while reading off the symbols, in this case either <math>{}^{\backprime\backprime} \texttt{(} {}^{\prime\prime}</math> or <math>{}^{\backprime\backprime} \texttt{)} {}^{\prime\prime},</math> that we happen to encounter in our travels. | + | In the case of our last example, a moment's contemplation of the following picture will lead us to see that we can get the corresponding parenthesis string by starting at the root of the tree, climbing up the left side of the tree until we reach the top, then climbing back down the right side of the tree until we return to the root, all the while reading off the symbols, in this case either <math>{}^{\backprime\backprime} \texttt{(} {}^{\prime\prime}~\!</math> or <math>{}^{\backprime\backprime} \texttt{)} {}^{\prime\prime},~\!</math> that we happen to encounter in our travels. |

{| align="center" cellpadding="10" | {| align="center" cellpadding="10" | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_6_Visible_Frame.jpg|500px]] |

|} | |} | ||

| − | This ritual is called ''traversing'' the tree, and the string read off is often called the ''traversal string'' of the tree. | + | This ritual is called ''traversing'' the tree, and the string read off is often called the ''traversal string'' of the tree. The reverse ritual, that passes from the string to the tree, is called ''parsing'' the string, and the tree constructed is often called the ''parse graph'' of the string. I tend to be a bit loose in this language, often using ''parse string'' to mean the string that gets parsed into the associated graph. |
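The two rituals just described can be sketched in a few lines of Python. This is only an illustrative sketch, not the author's implementation: the class name <code>Node</code> and the functions <code>parse</code> and <code>traverse</code> are hypothetical names chosen here, and blanks in the input string are simply ignored.

```python
class Node:
    """A node of a rooted tree; the root stands for the outermost region."""

    def __init__(self):
        self.children = []


def parse(string):
    """Parse a parenthesis string into a rooted tree (hypothetical helper)."""
    root = Node()
    stack = [root]  # path from the root to the node currently being filled
    for ch in string:
        if ch == "(":
            node = Node()
            stack[-1].children.append(node)
            stack.append(node)  # climb up one edge
        elif ch == ")":
            if len(stack) == 1:
                raise ValueError("unmatched right parenthesis")
            stack.pop()  # climb back down one edge
        elif not ch.isspace():
            raise ValueError("unexpected character: " + repr(ch))
    if len(stack) != 1:
        raise ValueError("unmatched left parenthesis")
    return root


def traverse(node):
    """Read off the traversal string: '(' going up an edge, ')' coming down."""
    return "".join("(" + traverse(child) + ")" for child in node.children)


if __name__ == "__main__":
    print(traverse(parse("( ( ) )")))
    print(traverse(parse("( )( )")))
```

Parsing followed by traversing returns the original string with its blanks removed, which is one way to check that the string and the tree carry the same information.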

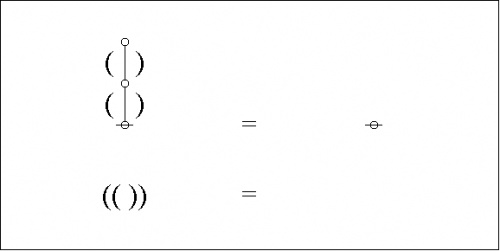

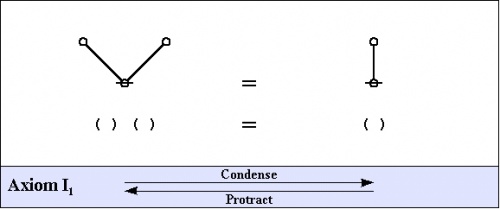

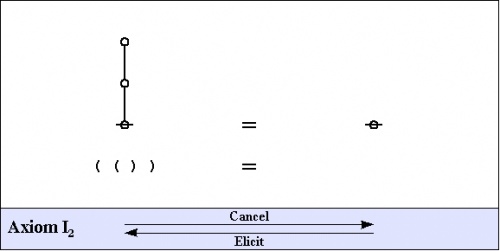

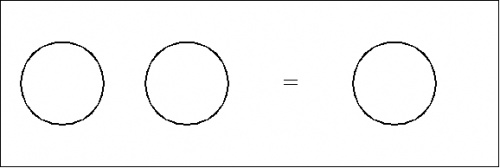

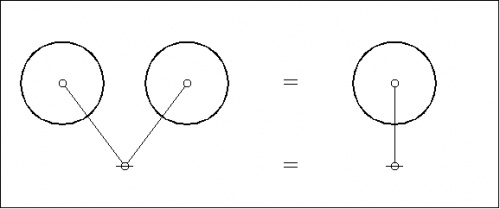

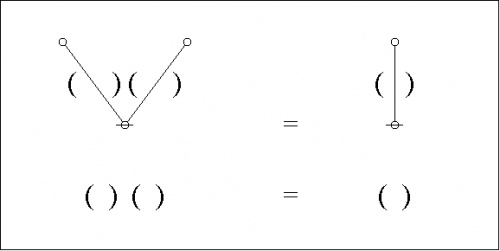

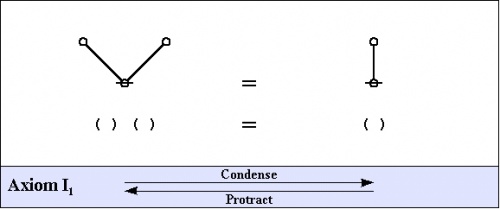

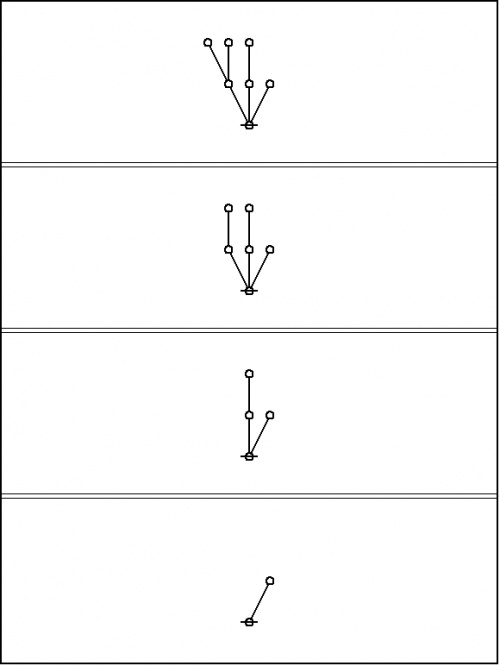

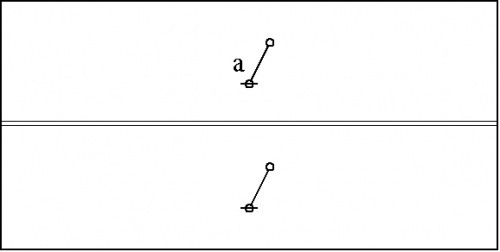

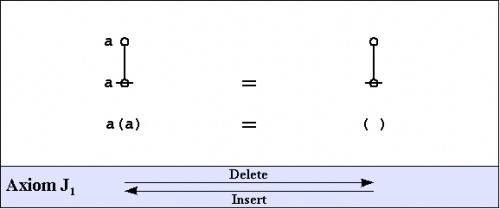

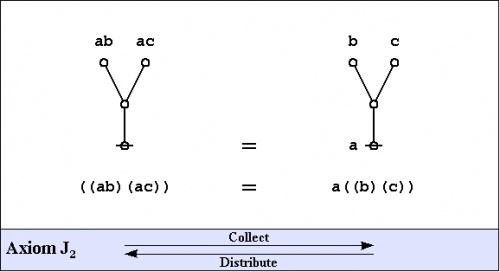

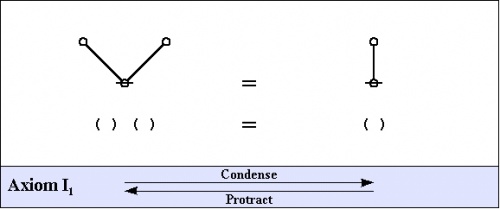

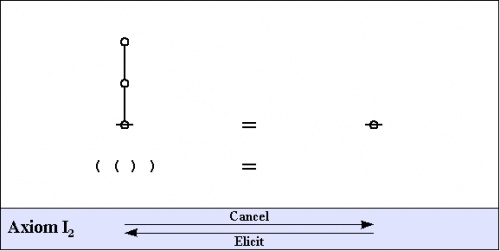

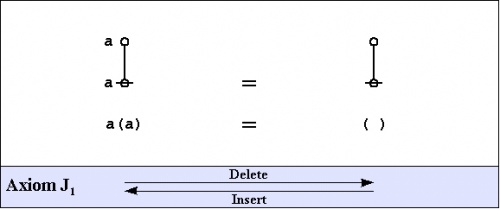

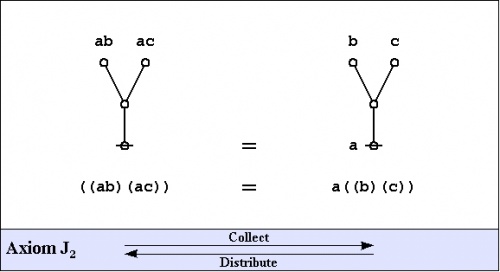

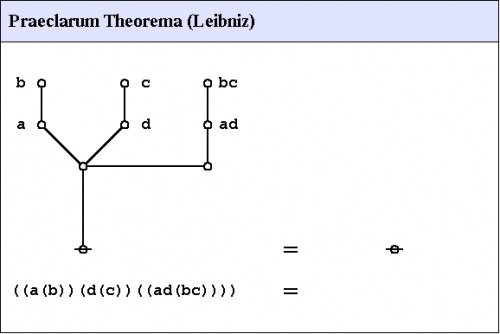

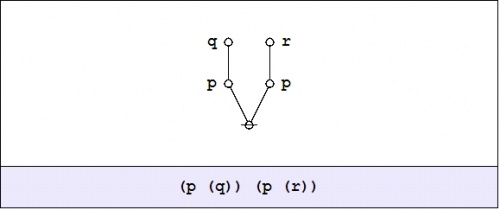

This much preparation allows us to present the two most basic axioms of logical graphs, shown in graph and string forms below, along with handy names for referring to the different directions of applying the axioms. | This much preparation allows us to present the two most basic axioms of logical graphs, shown in graph and string forms below, along with handy names for referring to the different directions of applying the axioms. | ||

{| align="center" cellpadding="10" | {| align="center" cellpadding="10" | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_14_Banner.jpg|500px]] |

|- | |- | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_15_Banner.jpg|500px]] |

|} | |} | ||

| − | The ''parse graphs'' that we've been looking at so far are one step toward the ''pointer graphs'' that it takes to make trees live in computer memory, but they are still a couple of steps too abstract to properly suggest in much concrete detail the species of dynamic data structures that we need. | + | The ''parse graphs'' that we've been looking at so far are one step toward the ''pointer graphs'' that it takes to make trees live in computer memory, but they are still a couple of steps too abstract to properly suggest in much concrete detail the species of dynamic data structures that we need. I now proceed to flesh out the skeleton that I've drawn up to this point. |

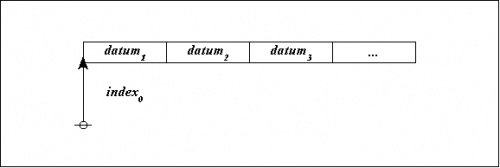

| − | Nodes in a graph depict ''records'' in computer memory. | + | Nodes in a graph depict ''records'' in computer memory. A record is a collection of data that can be thought to reside at a specific ''address''. For semioticians, an address can be recognized as a type of index, and is commonly spoken of, on analogy with demonstrative pronouns, as a ''pointer'', even among computer programmers who are otherwise innocent of semiotics. |

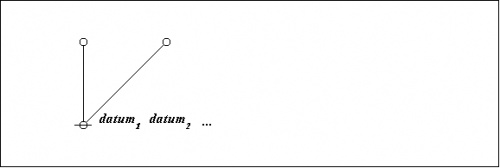

At the next level of concreteness, a pointer-record structure is represented as follows: | At the next level of concreteness, a pointer-record structure is represented as follows: | ||

{| align="center" cellpadding="10" | {| align="center" cellpadding="10" | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_11_Visible_Frame.jpg|500px]] |

|} | |} | ||

| − | This portrays the pointer <math>\mathit{index} | + | This portrays the pointer <math>\mathit{index}_{\,0}~\!</math> as the address of a record that contains the following data: |

{| align="center" cellpadding="10" | {| align="center" cellpadding="10" | ||

| − | | <math>\mathit{datum} | + | | <math>\mathit{datum}_{\,1}, \mathit{datum}_{\,2}, \mathit{datum}_{\,3}, \ldots,~\!</math> and so on. |

|} | |} | ||

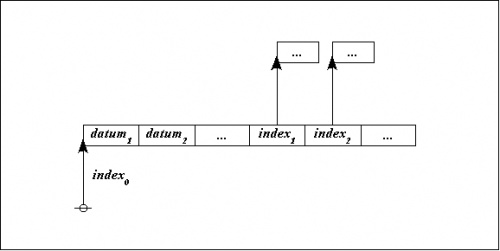

| Line 77: | Line 79: | ||

{| align="center" cellpadding="10" | {| align="center" cellpadding="10" | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_12_Visible_Frame.jpg|500px]] |

|} | |} | ||

| − | Back at the abstract level, it takes three nodes to represent the three data records, with a root node connected to two other nodes. | + | Back at the abstract level, it takes three nodes to represent the three data records, with a root node connected to two other nodes. The ordinary bits of data are then treated as labels on the nodes: |

{| align="center" cellpadding="10" | {| align="center" cellpadding="10" | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_13_Visible_Frame.jpg|500px]] |

|} | |} | ||
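As a rough sketch of the pointer-record idea, computer memory can be modelled as a Python dictionary from addresses to records, each record holding a label and a list of pointers. The addresses, labels, field names, and the function <code>edges_from_root</code> are all hypothetical choices made for this illustration; they do not come from the figures.

```python
# Memory modelled as a mapping from addresses (pointers) to records.
# Addresses 0, 1, 2 and the labels are made up for illustration.
memory = {
    0: {"label": "root", "pointers": [1, 2]},  # root record points to two others
    1: {"label": "datum a", "pointers": []},
    2: {"label": "datum b", "pointers": []},
}


def edges_from_root(memory, root):
    """List the edges as (parent, child) address pairs, oriented away from the root."""
    result = []
    pending = [root]
    while pending:
        address = pending.pop(0)
        for child in memory[address]["pointers"]:
            result.append((address, child))
            pending.append(child)
    return result


if __name__ == "__main__":
    for parent, child in edges_from_root(memory, 0):
        print(memory[parent]["label"], "->", memory[child]["label"])
```

Because every edge is discovered by following pointers outward from the root, the orientation of the edges never has to be stored separately.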

Notice that, with rooted trees like these, drawing the arrows is optional, since singling out a unique node as the root induces a unique orientation on all the edges of the tree, ''up'' being the same as ''away from the root''. | Notice that, with rooted trees like these, drawing the arrows is optional, since singling out a unique node as the root induces a unique orientation on all the edges of the tree, ''up'' being the same as ''away from the root''. | ||

| − | We have | + | We have treated in some detail various forms of the initial equation or logical axiom that is formulated in string form as <math>{}^{\backprime\backprime} \texttt{(} ~ \texttt{(} ~ \texttt{)} ~ \texttt{)} = \quad {}^{\prime\prime}.~\!</math> For the sake of comparison, let's record the plane-embedded and topological dual forms of the axiom that is formulated in string form as <math>{}^{\backprime\backprime} \texttt{(} ~ \texttt{)(} ~ \texttt{)} = \texttt{(} ~ \texttt{)} {}^{\prime\prime}.~\!</math> |

First the plane-embedded maps: | First the plane-embedded maps: | ||

{| align="center" cellpadding="10" | {| align="center" cellpadding="10" | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_7_Visible_Frame.jpg|500px]] |

|} | |} | ||

| − | Next the | + | Next the plane-embedded maps and their dual trees superimposed: |

{| align="center" cellpadding="10" | {| align="center" cellpadding="10" | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_8_Visible_Frame.jpg|500px]] |

|} | |} | ||

| Line 105: | Line 107: | ||

{| align="center" cellpadding="10" | {| align="center" cellpadding="10" | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_9_Visible_Frame.jpg|500px]] |

|} | |} | ||

| Line 111: | Line 113: | ||

{| align="center" cellpadding="10" | {| align="center" cellpadding="10" | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_10_Visible_Frame.jpg|500px]] |

|} | |} | ||

==Categories of structured individuals== | ==Categories of structured individuals== | ||

| − | We have at this point enough material to begin thinking about the forms of analogy, iconicity, metaphor, morphism | + | We have at this point enough material to begin thinking about the forms of analogy, iconicity, metaphor, or morphism that arise in the interpretation of logical graphs as logical propositions, in particular, the logically dual modes of interpretation that Peirce developed under the names of ''entitative graphs'' and ''existential graphs''. |

By way of providing a conceptual-technical framework for organizing that discussion, let me introduce the concept of a ''category of structured individuals'' (COSI). There may be some cause for some readers to rankle at the very idea of a ''structured individual'', for taking the notion of an individual in its strictest etymology would render it absurd that an atom could have parts, but all we mean by ''individual'' in this context is an individual by dint of some conversational convention currently in play, not an individual on account of its intrinsic indivisibility. Incidentally, though, it will also be convenient to take in the case of a class or collection of individuals with no pertinent inner structure as a trivial case of a COSI. | By way of providing a conceptual-technical framework for organizing that discussion, let me introduce the concept of a ''category of structured individuals'' (COSI). There may be some cause for some readers to rankle at the very idea of a ''structured individual'', for taking the notion of an individual in its strictest etymology would render it absurd that an atom could have parts, but all we mean by ''individual'' in this context is an individual by dint of some conversational convention currently in play, not an individual on account of its intrinsic indivisibility. Incidentally, though, it will also be convenient to take in the case of a class or collection of individuals with no pertinent inner structure as a trivial case of a COSI. | ||

| − | + | It seems natural to think ''category of structured individuals'' when the individuals in question have a whole lot of internal structure but ''collection of structured items'' when the individuals have a minimal amount of internal structure. For example, any set is a COSI, so any relation in extension is a COSI, but a 1-adic relation is just a set of 1-tuples, that are in some lights indiscernible from their single components, and so its structured individuals have far less structure than the k-tuples of k-adic relations, when k exceeds one. This spectrum of differentiations among relational models will be useful to bear in mind when the time comes to say what distinguishes relational thinking proper from 1-adic and 2-adic thinking, that constitute its degenerate cases. | |

Still on our way to saying what brands of iconicity are worth buying, at least when it comes to graphical systems of logic, it will be useful to introduce one more distinction that affects the types of mappings that can be formed between two COSIs. | Still on our way to saying what brands of iconicity are worth buying, at least when it comes to graphical systems of logic, it will be useful to introduce one more distinction that affects the types of mappings that can be formed between two COSIs. | ||

| Line 128: | Line 130: | ||

Because I plan this time around a somewhat leisurely excursion through the primordial wilds of logics that were so intrepidly explored by C.S. Peirce and again in recent times revisited by George Spencer Brown, let me just give a few extra pointers to those who wish to run on ahead of this torturous tortoise pace: | Because I plan this time around a somewhat leisurely excursion through the primordial wilds of logics that were so intrepidly explored by C.S. Peirce and again in recent times revisited by George Spencer Brown, let me just give a few extra pointers to those who wish to run on ahead of this torturous tortoise pace: | ||

| − | * Jon Awbrey | + | :* Jon Awbrey, [http://intersci.ss.uci.edu/wiki/index.php/Propositional_Equation_Reasoning_Systems Propositional Equation Reasoning Systems]. |

| − | * Lou Kauffman | + | |

| + | :* Lou Kauffman, [http://www.math.uic.edu/~kauffman/Arithmetic.htm Box Algebra, Boundary Mathematics, Logic, and Laws of Form]. | ||

| − | Two paces back I used the word | + | Two paces back I used the word ''category'' in a way that will turn out to be not too remote a cousin of its present-day mathematical bearing, but also in a way that's not unrelated to Peirce's theory of categories. |

When I call to mind a ''category of structured individuals'' (COSI), I get a picture of a certain form, with blanks to be filled in as the thought of it develops, that can be sketched at first like so: | When I call to mind a ''category of structured individuals'' (COSI), I get a picture of a certain form, with blanks to be filled in as the thought of it develops, that can be sketched at first like so: | ||

| − | {| align="center" | + | {| align="center" cellpadding="10" |

| | | | ||

<pre> | <pre> | ||

| − | + | ||

| − | + | Category @ | |

| − | + | / \ | |

| − | + | / \ | |

| − | + | / \ | |

| − | + | / \ | |

| − | + | / \ | |

| − | + | Individuals o ... o | |

| − | + | / \ / \ | |

| − | + | / \ / \ | |

| − | + | / \ / \ | |

| − | + | Structures o->-o->-o o->-o->-o | |

| − | + | ||

</pre> | </pre> | ||

|} | |} | ||

| Line 156: | Line 159: | ||

The various glyphs of this picturesque hierarchy serve to remind us that a COSI in general consists of many individuals, which in spite of their calling as such may have specific structures involving the ordering of their component parts. Of course, this generic picture may have degenerate realizations, as when we have a 1-adic relation, that may be viewed in most settings as nothing different than a set: | The various glyphs of this picturesque hierarchy serve to remind us that a COSI in general consists of many individuals, which in spite of their calling as such may have specific structures involving the ordering of their component parts. Of course, this generic picture may have degenerate realizations, as when we have a 1-adic relation, that may be viewed in most settings as nothing different than a set: | ||

| − | {| align="center" | + | {| align="center" cellpadding="10" |

| | | | ||

<pre> | <pre> | ||

| − | + | ||

| − | + | Category @ | |

| − | + | / \ | |

| − | + | / \ | |

| − | + | / \ | |

| − | + | / \ | |

| − | + | / \ | |

| − | + | Individuals o ... o | |

| − | + | | | | |

| − | + | | | | |

| − | + | | | | |

| − | + | Structures o ... o | |

| − | + | ||

</pre> | </pre> | ||

|} | |} | ||

| − | The practical use of Peirce's categories is simply to organize our thoughts about what sorts of formal models are demanded by a material situation, for instance, a domain of phenomena from atoms to biology to culture. To say that | + | The practical use of Peirce's categories is simply to organize our thoughts about what sorts of formal models are demanded by a material situation, for instance, a domain of phenomena from atoms to biology to culture. To say that “''k''-ness” is involved in a phenomenon is simply to say that we need ''k''-adic relations to model it adequately, and that the phenomenon itself appears to demand nothing less. Aside from this, Peirce's realization that ''k''-ness for ''k'' = 1, 2, 3 affords us a sufficient basis for all that we need to model is a formal fact that depends on a particular theorem in the logic of relatives. If it weren't for that, there would hardly be any reason to single out three. |

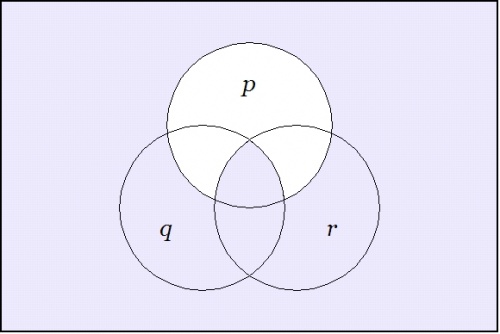

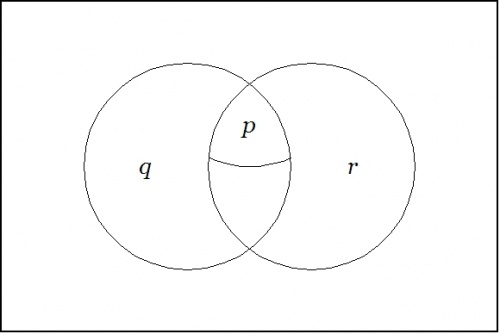

| − | In order to discuss the various forms of iconicity that might be involved in the application of Peirce's logical graphs and their kind to the object domain of logic itself, we will need to bring out two or three | + | In order to discuss the various forms of iconicity that might be involved in the application of Peirce's logical graphs and their kind to the object domain of logic itself, we will need to bring out two or three ''categories of structured individuals'' (COSIs), depending on how one counts. These are called the ''object domain'', the ''sign domain'', and the ''interpretant sign domain'', which may be written <math>{O, S, I},~\!</math> respectively, or <math>{X, Y, Z},~\!</math> respectively, depending on the style that fits the current frame of discussion. |

| − | For the time being, | + | For the time being, we will be considering systems where the sign domain and the interpretant domain are the same sets of entities, although, of course, their roles in a given ''[[sign relation]]'', say, <math>L \subseteq O \times S \times I</math> or <math>L \subseteq X \times Y \times Z,</math> remain as distinct as ever. We may use the term ''semiotic domain'' for the common set of elements that constitute the signs and the interpretant signs in any setting where the sign domain and the interpretant domain are equal as sets. |

| − | + | With respect to the ''alpha level'', ''primary arithmetic'', or ''zeroth order'' of consideration that we have so far introduced, the sign domain is any one of the several ''formal languages'' that we have placed in one-to-one correspondence with each other, namely, the languages of non-intersecting plane closed curves, well-formed parenthesis strings, and rooted trees. The interpretant sign domain will for the present be taken to be any one of the same languages, and so we may refer to any of them indifferently as the ''semiotic domain''. | |

Briefly if roughly put, icons are signs that denote their objects by virtue of sharing properties with them. To put it a bit more fully, icons are signs that receive their interpretant signs on account of having specific properties in common with their objects. | Briefly if roughly put, icons are signs that denote their objects by virtue of sharing properties with them. To put it a bit more fully, icons are signs that receive their interpretant signs on account of having specific properties in common with their objects. | ||

| − | The family of related relationships that fall under the headings of analogy, icon, metaphor, model, simile, simulation, and so on forms an extremely important complex of ideas in mathematics, there being recognized under the generic idea of | + | The family of related relationships that fall under the headings of analogy, icon, metaphor, model, simile, simulation, and so on forms an extremely important complex of ideas in mathematics, there being recognized under the generic idea of ''structure-preserving mappings'' and commonly formalized in the language of homomorphisms, morphisms, or ''arrows'', depending on the operative level of abstraction that's in play. |

To consider how a system of logical graphs, taken together as a semiotic domain, might bear an iconic relationship to a system of logical objects that make up our object domain, we will next need to consider what our logical objects are. | To consider how a system of logical graphs, taken together as a semiotic domain, might bear an iconic relationship to a system of logical objects that make up our object domain, we will next need to consider what our logical objects are. | ||

| − | A popular answer, if by popular one means that both Peirce and Frege agreed on it, is to say that our ultimate logical objects are without loss of generality most conveniently referred to as Truth and Falsity. If nothing else, it serves the end of beginning simply to go along with this thought for a while, and so we can start with an object domain that consists of just two objects or | + | A popular answer, if by popular one means that both Peirce and Frege agreed on it, is to say that our ultimate logical objects are without loss of generality most conveniently referred to as Truth and Falsity. If nothing else, it serves the end of beginning simply to go along with this thought for a while, and so we can start with an object domain that consists of just two ''objects'' or ''values'', to wit, <math>O = \mathbb{B} = \{ \mathrm{false}, \mathrm{true} \}.</math> |

| − | Given those two categories of structured individuals, namely, | + | Given those two categories of structured individuals, namely, <math>O = \mathbb{B} = \{ \mathrm{false}, \mathrm{true} \}</math> and <math>S = \{ \text{logical graphs} \},~\!</math> the next task is to consider the brands of morphisms from <math>S~\!</math> to <math>O~\!</math> that we might reasonably have in mind when we speak of the ''arrows of interpretation''. |

| − | With the aim of embedding our consideration of logical graphs, as seems most fitting, within Peirce's theory of triadic sign relations, we have declared the first layers of our object, sign, and interpretant domains. As we often do in formal studies, we've taken the sign and interpretant domains to be the same set, | + | With the aim of embedding our consideration of logical graphs, as seems most fitting, within Peirce's theory of triadic sign relations, we have declared the first layers of our object, sign, and interpretant domains. As we often do in formal studies, we've taken the sign and interpretant domains to be the same set, <math>S = I,~\!</math> calling it the ''semiotic domain'', or, as I see that I've done in some other notes, the ''syntactic domain''. |

Truth and Falsity, the objects that we've so far declared, are recognizable as abstract objects, and like so many other hypostatic abstractions that we use they have their use in moderating between a veritable profusion of more concrete objects and more concrete signs, in ''factoring complexity'' as some people say, despite the fact that some complexities are irreducible in fact. | Truth and Falsity, the objects that we've so far declared, are recognizable as abstract objects, and like so many other hypostatic abstractions that we use they have their use in moderating between a veritable profusion of more concrete objects and more concrete signs, in ''factoring complexity'' as some people say, despite the fact that some complexities are irreducible in fact. | ||

| Line 201: | Line 204: | ||

As agents of systems, whether that system is our own physiology or our own society, we move through what we commonly imagine to be a continuous manifold of states, but with distinctions being drawn in that space that are every bit as compelling to us, and often quite literally, as the difference between life and death. So the relation of discretion to continuity is not one of those issues that we can take lightly, or simply dissolve by choosing a side and ignoring the other, as we may imagine in abstraction. I'll try to get back to this point later, one in a long list of cautionary notes that experience tells me has to be attached to every tale of our pilgrimage, but for now we must get under way. | As agents of systems, whether that system is our own physiology or our own society, we move through what we commonly imagine to be a continuous manifold of states, but with distinctions being drawn in that space that are every bit as compelling to us, and often quite literally, as the difference between life and death. So the relation of discretion to continuity is not one of those issues that we can take lightly, or simply dissolve by choosing a side and ignoring the other, as we may imagine in abstraction. I'll try to get back to this point later, one in a long list of cautionary notes that experience tells me has to be attached to every tale of our pilgrimage, but for now we must get under way. | ||

| − | Returning to | + | Returning to <math>\mathrm{En}</math> and <math>\mathrm{Ex},</math> the two most popular interpretations of logical graphs, ones that happen to be dual to each other in a certain sense, let's see how they fly as ''hermeneutic arrows'' from the syntactic domain <math>S~\!</math> to the object domain <math>O,~\!</math> at any rate, as their trajectories can be spied in the radar of what George Spencer Brown called the ''primary arithmetic''. |

| − | Taking | + | Taking <math>\mathrm{En}~\!</math> and <math>\mathrm{Ex}~\!</math> as arrows of the form <math>\mathrm{En}, \mathrm{Ex} : S \to O,</math> at the level of arithmetic taking <math>S = \{ \text{rooted trees} \}~\!</math> and <math>O = \{ \mathrm{falsity}, \mathrm{truth} \},~\!</math> it is possible to factor each arrow across the domain <math>S_0~\!</math> that consists of a single rooted node plus a single rooted edge, in other words, the domain of formal constants <math>S_0 = \{ \ominus, \vert \} = \{</math>[[File:Rooted Node Big.jpg|16px]], [[File:Rooted Edge Big.jpg|12px]]<math>\}.~\!</math> This allows each arrow to be broken into a purely syntactic part <math>\mathrm{En}_\text{syn}, \mathrm{Ex}_\text{syn} : S \to S_0</math> and a purely semantic part <math>\mathrm{En}_\text{sem}, \mathrm{Ex}_\text{sem} : S_0 \to O.</math> |

As things work out, the syntactic factors are formally the same, leaving our dualing interpretations to differ in their semantic components alone. Specifically, we have the following mappings: | As things work out, the syntactic factors are formally the same, leaving our dualing interpretations to differ in their semantic components alone. Specifically, we have the following mappings: | ||

| − | + | {| cellpadding="6" | |

| − | + | | width="5%" | | |

| − | + | | width="5%" | <math>\mathrm{En}_\text{sem} :</math> | |

| + | | width="5%" align="center" | [[File:Rooted Node Big.jpg|16px]] | ||

| + | | width="5%" | <math>\mapsto</math> | ||

| + | | <math>\mathrm{false},</math> | ||

| + | |- | ||

| + | | | ||

| + | | | ||

| + | | align="center" | [[File:Rooted Edge Big.jpg|12px]] | ||

| + | | <math>\mapsto</math> | ||

| + | | <math>\mathrm{true}.</math> | ||

| + | |- | ||

| + | | | ||

| + | | <math>\mathrm{Ex}_\text{sem} :</math> | ||

| + | | align="center" | [[File:Rooted Node Big.jpg|16px]] | ||

| + | | <math>\mapsto</math> | ||

| + | | <math>\mathrm{true},</math> | ||

| + | |- | ||

| + | | | ||

| + | | | ||

| + | | align="center" | [[File:Rooted Edge Big.jpg|12px]] | ||

| + | | <math>\mapsto</math> | ||

| + | | <math>\mathrm{false}.</math> | ||

| + | |} | ||

| − | On the other side of the ledger, because the syntactic factors, | + | On the other side of the ledger, because the syntactic factors, <math>\mathrm{En}_\text{syn}</math> and <math>\mathrm{Ex}_\text{syn},</math> are indiscernible from each other, there is a syntactic contribution to the overall interpretation process that can be most readily investigated on purely formal grounds. That will be the task to face when next we meet on these lists. |

Cast into the form of a 3-adic sign relation, the situation before us can now be given the following shape: | Cast into the form of a 3-adic sign relation, the situation before us can now be given the following shape: | ||

| − | {| align="center" | + | {| align="center" cellpadding="10" |

| | | | ||

<pre> | <pre> | ||

| − | + | ||

| − | + | Y | |

| − | + | Semiotic Domain | |

| − | + | o-------------------o | |

| − | + | | | | |

| − | + | | Rooted Trees | | |

| − | + | | | | |

| − | + | o-------------------o | |

| − | + | | | |

| − | + | | | |

| − | + | Syntactic | |

| − | + | Reduction | |

| − | + | | | |

| − | + | | | |

| − | + | v | |

| − | + | o-----------o En_sem o-----------o | |

| − | + | | |<--------------| o | | |

| − | + | | F T | | O | | | |

| − | + | | |<--------------| @ | | |

| − | + | o-----------o Ex_sem o-----------o | |

| − | + | X Y_0 | |

| − | + | Canonical | |

| − | + | Object Domain Sign Domain | |

| − | + | ||

</pre> | </pre> | ||

|} | |} | ||

| − | The interpretation maps | + | The interpretation maps <math>\mathrm{En}, \mathrm{Ex} : Y \to X</math> are factored into (1) a common syntactic part and (2) a couple of distinct semantic parts: |

| − | + | {| align="center" cellpadding="10" width="90%" | |

| + | | | ||

| + | <math>\begin{array}{ll} | ||

| + | 1. & | ||

| + | \mathrm{En}_\text{syn} = \mathrm{Ex}_\text{syn} = \mathrm{E}_\text{syn} : Y \to Y_0 | ||

| + | \\[10pt] | ||

| + | 2. & | ||

| + | \mathrm{En}_\text{sem}, \mathrm{Ex}_\text{sem} : Y_0 \to X | ||

| + | \end{array}</math> | ||

| + | |} | ||

| − | and | + | The functional images of the syntactic reduction map <math>\mathrm{E}_\text{syn} : Y \to Y_0</math> are the two simplest signs or the most reduced pair of expressions, regarded as the rooted trees [[File:Rooted Node Big.jpg|16px]] and [[File:Rooted Edge Big.jpg|12px]], and these may be treated as the canonical representatives of their respective equivalence classes. |
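The factorization just described can be sketched in a few lines of code. This is only an illustration under stated assumptions, not part of the original formalism: expressions are taken to be well-formed parenthesis strings, the arithmetic initials are assumed to have the usual forms ( )( ) = ( ) and (( )) = blank, and the names <code>e_syn</code>, <code>EN_SEM</code>, <code>EX_SEM</code>, <code>en</code>, and <code>ex</code> are hypothetical labels chosen for the sketch.

```python
# Canonical signs in Y_0, encoded as parenthesis strings (an assumed encoding):
# "" stands for the rooted node (blank), "()" stands for the rooted edge.
NODE, EDGE = "", "()"

def e_syn(expr: str) -> str:
    """Common syntactic factor: reduce a well-formed parenthesis string
    to one of the two canonical signs, NODE or EDGE."""
    s = "".join(expr.split())  # drop all whitespace
    while True:
        # Apply the two assumed arithmetic initials as rewrite rules.
        t = s.replace("(())", "").replace("()()", "()")
        if t == s:             # fixed point reached
            return s
        s = t

# Distinct semantic factors, as tabulated in the text above.
EN_SEM = {NODE: False, EDGE: True}
EX_SEM = {NODE: True, EDGE: False}

def en(expr: str) -> bool:
    """Entitative interpretation as the composite of e_syn and EN_SEM."""
    return EN_SEM[e_syn(expr)]

def ex(expr: str) -> bool:
    """Existential interpretation as the composite of e_syn and EX_SEM."""
    return EX_SEM[e_syn(expr)]
```

On this sketch the two interpretations share every step of the reduction and disagree only at the final table lookup, which is the sense in which they differ in their semantic components alone. A malformed string would fail at the lookup rather than be interpreted.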

| − | + | The more Peirce-sistent among you, on contemplating that last picture, will naturally ask, "What happened to the irreducible 3-adicity of sign relations in this portrayal of logical graphs?" | |

| − | + | {| align="center" cellpadding="10" | |

| − | |||

| − | |||

| − | |||

| − | {| align="center" | ||

| | | | ||

<pre> | <pre> | ||

| − | + | ||

| − | + | Y | |

| − | + | Semiotic Domain | |

| − | + | o-------------------o | |

| − | + | | | | |

| − | + | | Rooted Trees | | |

| − | + | | | | |

| − | + | o-------------------o | |

| − | + | | | |

| − | + | | | |

| − | + | Syntactic | |

| − | + | Reduction | |

| − | + | | | |

| − | + | | | |

| − | + | v | |

| − | + | o-----------o En_sem o-----------o | |

| − | + | | |<--------------| o | | |

| − | + | | F T | | O | | | |

| − | + | | |<--------------| @ | | |

| − | + | o-----------o Ex_sem o-----------o | |

| − | + | X Y_0 | |

| − | + | Canonical | |

| − | + | Object Domain Sign Domain | |

| − | + | ||

</pre> | </pre> | ||

|} | |} | ||

| − | The answer is that the last bastion of 3-adic irreducibility | + | The answer is that the last bastion of 3-adic irreducibility presides precisely in the duality of the dual interpretations <math>\mathrm{En}_\text{sem}</math> and <math>\mathrm{Ex}_\text{sem}.</math> To see this, consider the consequences of there being, contrary to all that we've assumed up to this point, some ultimately compelling reason to assert that the clean slate, the empty medium, the vacuum potential, whatever one wants to call it, is inherently more meaningful of either Falsity or Truth. This would issue in a conviction forthwith that the 3-adic sign relation involved in this case decomposes as a composition of a couple of functions, that is to say, reduces to a 2-adic relation. |

The duality of interpretation for logical graphs tells us that the empty medium, the tabula rasa, what Peirce called the ''Sheet of Assertion'' (SA), is a genuine symbol, not to be found among the degenerate species of signs that make up icons and indices, nor, as the SA has no parts, can it number icons or indices among its parts. What goes for the medium must go for all of the signs that it mediates. Thus we have the kinds of signs that Peirce in one place called "pure symbols", naming a selection of signs for basic logical operators specifically among them. | The duality of interpretation for logical graphs tells us that the empty medium, the tabula rasa, what Peirce called the ''Sheet of Assertion'' (SA), is a genuine symbol, not to be found among the degenerate species of signs that make up icons and indices, nor, as the SA has no parts, can it number icons or indices among its parts. What goes for the medium must go for all of the signs that it mediates. Thus we have the kinds of signs that Peirce in one place called "pure symbols", naming a selection of signs for basic logical operators specifically among them. | ||

| − | < | + | {| align="center" cellpadding="10" width="90%" |

| − | Every word is a symbol. Every sentence is a symbol. Every book is a symbol. Every representamen depending upon conventions is a symbol. Just as a photograph is an index having an icon incorporated into it, that is, excited in the mind by its force, so a symbol may have an icon or an index incorporated into it, that is, the active law that it is may require its interpretation to involve the calling up of an image, or a composite photograph of many images of past experiences, as ordinary common nouns and verbs do; or it may require its interpretation to refer to the actual surrounding circumstances of the occasion of its embodiment, like such words as 'that', 'this', 'I', 'you', 'which', 'here', 'now', 'yonder', etc. Or it may be pure symbol, neither 'iconic' nor 'indicative', like the words 'and', 'or', 'of', etc. | + | | |

| − | </ | + | <p>Thus the mode of being of the symbol is different from that of the icon and from that of the index. An icon has such being as belongs to past experience. It exists only as an image in the mind. An index has the being of present experience. The being of a symbol consists in the real fact that something surely will be experienced if certain conditions be satisfied. Namely, it will influence the thought and conduct of its interpreter.</p> |

| + | |||

| + | <p>Every word is a symbol. Every sentence is a symbol. Every book is a symbol. Every representamen depending upon conventions is a symbol. Just as a photograph is an index having an icon incorporated into it, that is, excited in the mind by its force, so a symbol may have an icon or an index incorporated into it, that is, the active law that it is may require its interpretation to involve the calling up of an image, or a composite photograph of many images of past experiences, as ordinary common nouns and verbs do; or it may require its interpretation to refer to the actual surrounding circumstances of the occasion of its embodiment, like such words as ''that'', ''this'', ''I'', ''you'', ''which'', ''here'', ''now'', ''yonder'', etc. Or it may be pure symbol, neither ''iconic'' nor ''indicative'', like the words ''and'', ''or'', ''of'', etc.</p> | ||

| + | |||

| + | <p>(Peirce, ''Collected Papers'', CP 4.447)</p> | ||

| + | |} | ||

Some will recall the many animadversions that we had on this topic, starting here: | Some will recall the many animadversions that we had on this topic, starting here: | ||

| − | * | + | :* Pure Symbols |

| − | + | :: [http://web.archive.org/web/20141124153003/http://stderr.org/pipermail/inquiry/2005-March/thread.html#2465 2005 • March] | |

| − | + | :: [http://web.archive.org/web/20120601160642/http://stderr.org/pipermail/inquiry/2005-April/thread.html#2517 2005 • April] | |

| − | * | + | :* Pure Symbols : Discussion |

| − | + | :: [http://web.archive.org/web/20141124153003/http://stderr.org/pipermail/inquiry/2005-March/thread.html#2466 2005 • March] | |

| − | + | :: [http://web.archive.org/web/20120601160642/http://stderr.org/pipermail/inquiry/2005-April/thread.html#2514 2005 • April] | |

| − | + | :: [http://web.archive.org/web/20120421003708/http://stderr.org/pipermail/inquiry/2005-May/thread.html#2654 2005 • May] | |

And some will find an ethical principle in this freedom of interpretation. The act of interpretation bears within it an inalienable degree of freedom. In consequence of this truth, as far as the activity of interpretation goes, freedom and responsibility are the very same thing. We cannot blame objects for what we say or what we think. We cannot blame symbols for what we do. We cannot escape our response ability. We cannot escape our freedom. | And some will find an ethical principle in this freedom of interpretation. The act of interpretation bears within it an inalienable degree of freedom. In consequence of this truth, as far as the activity of interpretation goes, freedom and responsibility are the very same thing. We cannot blame objects for what we say or what we think. We cannot blame symbols for what we do. We cannot escape our response ability. We cannot escape our freedom. | ||

| Line 315: | Line 350: | ||

{| align="center" cellpadding="10" | {| align="center" cellpadding="10" | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_14_Banner.jpg|500px]] |

|- | |- | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_15_Banner.jpg|500px]] |

|} | |} | ||

| − | Let <math>S\!</math> be the set of rooted trees and let <math>S_0\!</math> be the 2-element subset of <math>S\!</math> that consists of a rooted node and a rooted edge. | + | Let <math>S~\!</math> be the set of rooted trees and let <math>S_0~\!</math> be the 2-element subset of <math>S~\!</math> that consists of a rooted node and a rooted edge. |

{| align="center" cellpadding="10" style="text-align:center" | {| align="center" cellpadding="10" style="text-align:center" | ||

| − | | <math>S\!</math> | + | | <math>S~\!</math> |

| − | | <math>=\!</math> | + | | <math>=~\!</math> |

| − | | <math>\{ \text{rooted trees} \}\!</math> | + | | <math>\{ \text{rooted trees} \}~\!</math> |

|- | |- | ||

| − | | <math>S_0\!</math> | + | | <math>S_0~\!</math> |

| − | | <math>=\!</math> | + | | <math>=~\!</math> |

| − | | <math>\{ \ominus, \vert \} = \{</math>[[ | + | | <math>\{ \ominus, \vert \} = \{</math>[[File:Rooted Node Big.jpg|16px]], [[File:Rooted Edge Big.jpg|12px]]<math>\}~\!</math> |

|} | |} | ||

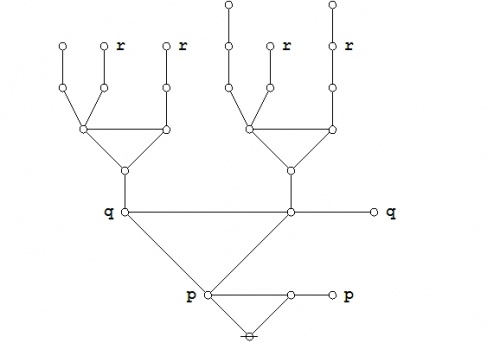

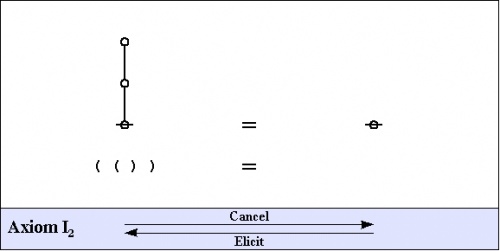

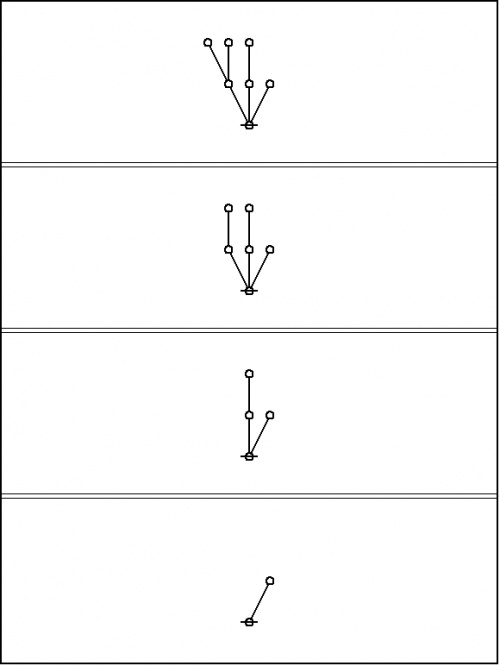

| − | Simple intuition, or a simple inductive proof, assures us that any rooted tree can be reduced by way of the arithmetic initials either to a root node [[ | + | Simple intuition, or a simple inductive proof, assures us that any rooted tree can be reduced by way of the arithmetic initials either to a root node [[File:Rooted Node Big.jpg|16px]] or else to a rooted edge [[File:Rooted Edge Big.jpg|12px]] . |
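The inductive argument can be mirrored by a short recursive function. The sketch below rests on assumptions that are not spelled out in the text at this point: a rooted tree is encoded as a nested tuple of its child subtrees, and the arithmetic initials are taken in their usual forms, ( )( ) = ( ) and (( )) = blank. The names <code>NODE</code>, <code>EDGE</code>, and <code>reduce_tree</code> are hypothetical.

```python
# A rooted tree is encoded as the tuple of its child subtrees (assumed encoding).
NODE = ()      # a lone root node, the blank
EDGE = ((),)   # a root with a single leaf child, the rooted edge

def reduce_tree(tree):
    """Reduce a rooted tree to NODE or EDGE by induction on its structure.

    Each child, together with the edge joining it to the root, acts as an
    enclosure of the child's own subtree.  An enclosure of something that
    reduces to NODE reduces to EDGE, an enclosure of something that reduces
    to EDGE cancels to the blank, and any number of EDGEs side by side
    condense to a single EDGE.
    """
    for child in tree:
        if reduce_tree(child) == NODE:
            return EDGE
    return NODE
```

The recursion bottoms out at leaves, which have no children and so reduce to <code>NODE</code>, and every internal step applies one of the two initials, so the function always returns one of the two formal constants.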

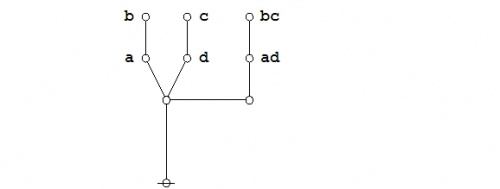

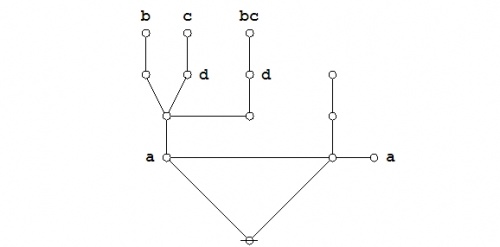

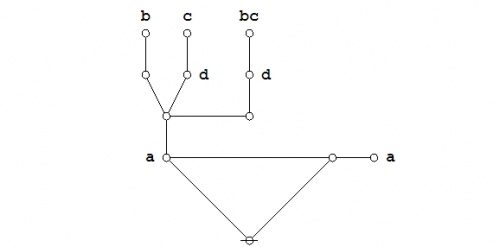

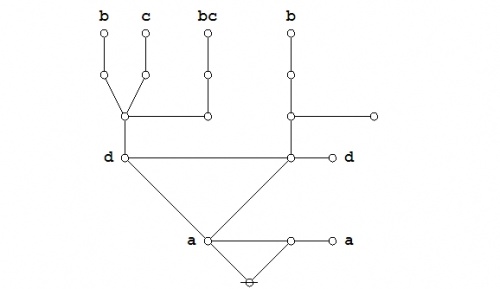

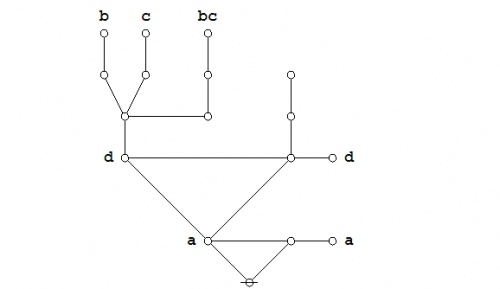

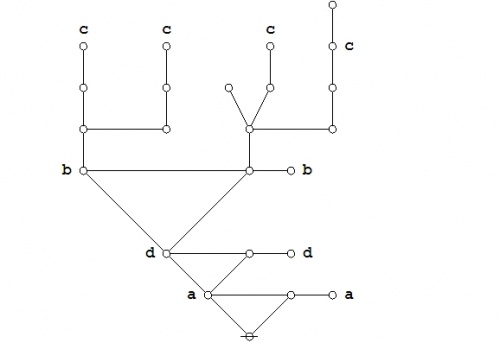

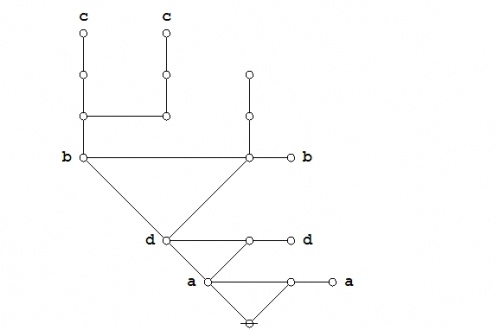

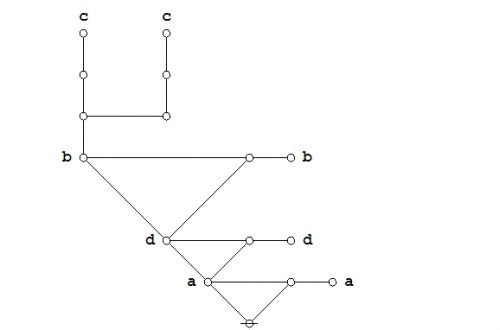

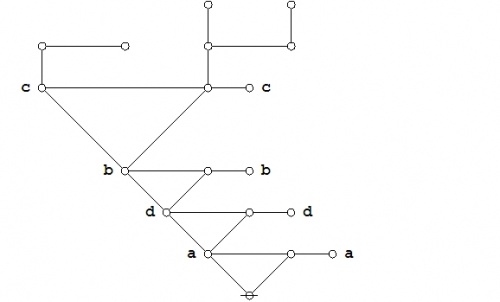

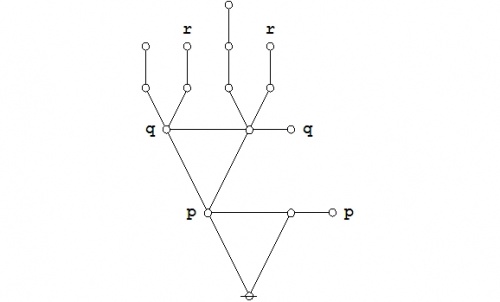

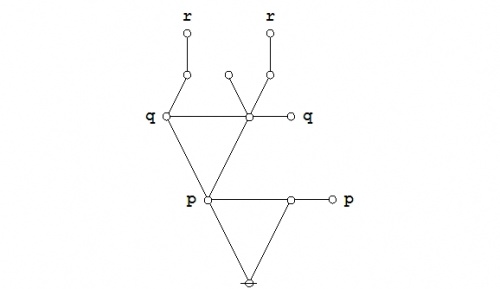

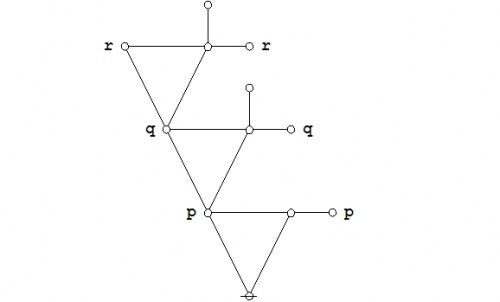

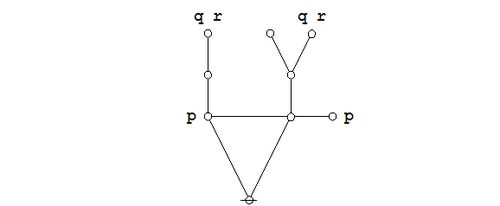

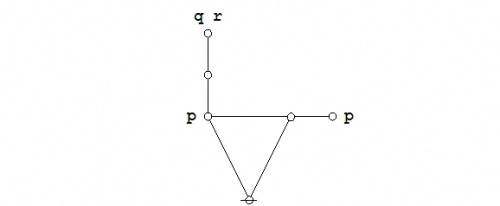

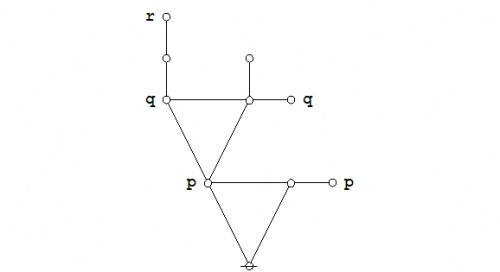

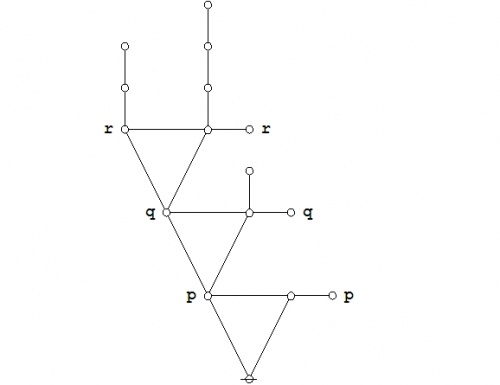

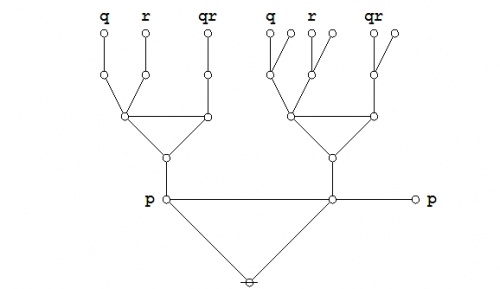

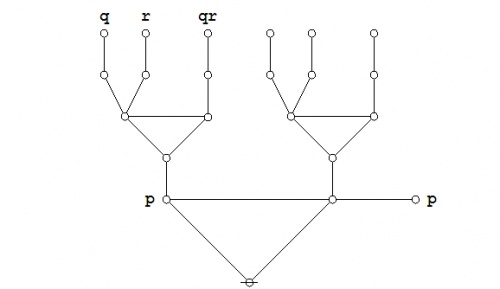

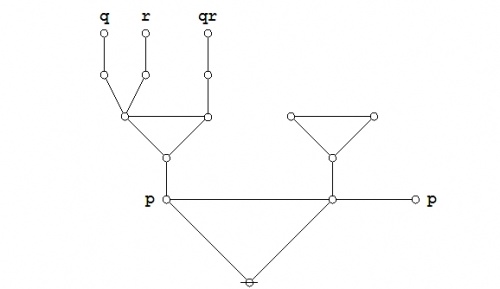

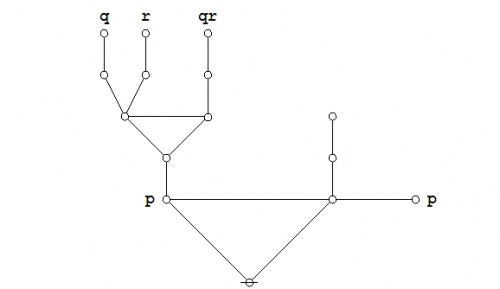

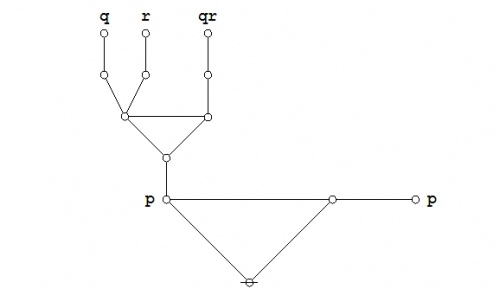

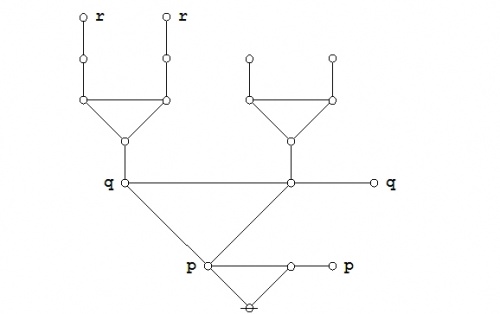

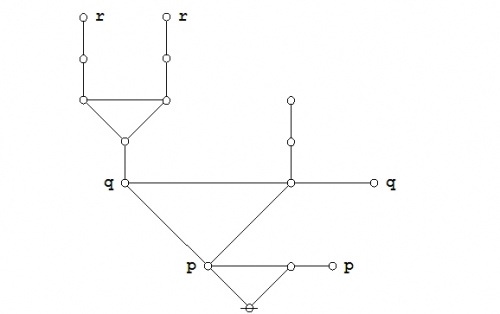

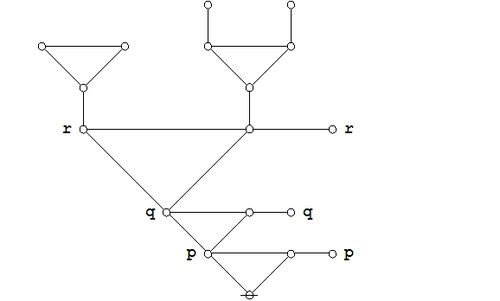

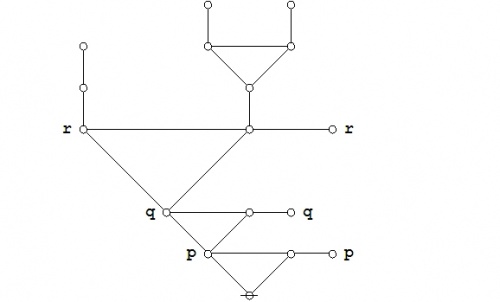

For example, consider the reduction that proceeds as follows: | For example, consider the reduction that proceeds as follows: | ||

{| align="center" cellpadding="10" | {| align="center" cellpadding="10" | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_16.jpg|500px]] |

|} | |} | ||

| Line 351: | Line 386: | ||

{| align="center" cellpadding="10" | {| align="center" cellpadding="10" | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_16.jpg|500px]] |

|} | |} | ||

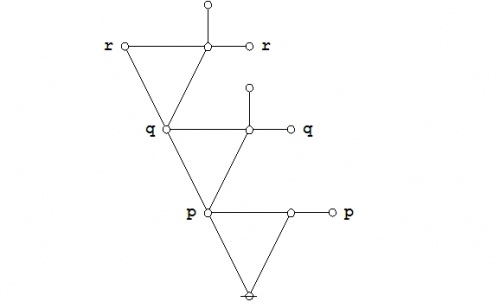

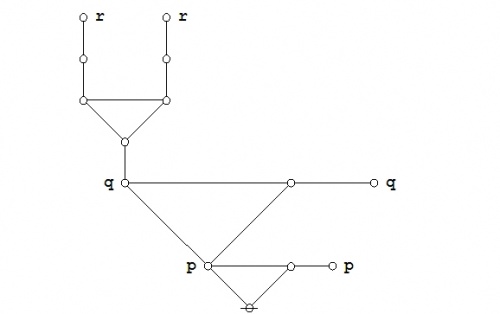

| Line 359: | Line 394: | ||

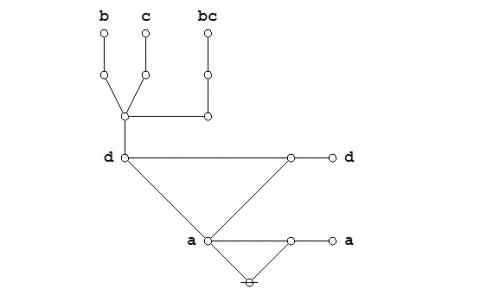

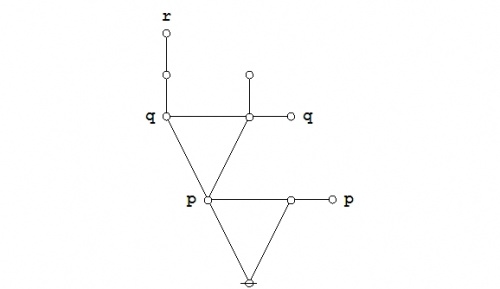

{| align="center" cellpadding="10" | {| align="center" cellpadding="10" | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_17.jpg|500px]] |

|} | |} | ||

Observations like that, made about an arithmetic of any variety, germinated by their summarizations, are the root of all algebra. | Observations like that, made about an arithmetic of any variety, germinated by their summarizations, are the root of all algebra. | ||

| − | Speaking of algebra, and having seen one example of an algebraic law, we might as well introduce the axioms of the ''primary algebra'', once again deriving their substance and their name from Charles | + | Speaking of algebra, and having seen one example of an algebraic law, we might as well introduce the axioms of the ''primary algebra'', once again deriving their substance and their name from Charles Sanders Peirce and George Spencer Brown, respectively. |

{| align="center" cellpadding="10" | {| align="center" cellpadding="10" | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_18.jpg|500px]] |

|- | |- | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_19.jpg|500px]] |

|} | |} | ||

| Line 384: | Line 419: | ||

Thus, if you find yourself in an argument with another interpreter who swears to the influence of some quality common to the object and the sign and that really does affect his or her conduct in regard to the two of them, then that argument is almost certainly bound to be utterly futile. I am sure we've all been there. | Thus, if you find yourself in an argument with another interpreter who swears to the influence of some quality common to the object and the sign and that really does affect his or her conduct in regard to the two of them, then that argument is almost certainly bound to be utterly futile. I am sure we've all been there. | ||

| − | When I first became acquainted with the Entish and Extish hermenautics of logical graphs, back in the late great | + | When I first became acquainted with the Entish and Extish hermenautics of logical graphs, back in the late great 1960s, I was struck in the spirit of those times by what I imagined to be their Zen and Zenoic sensibilities, the ''tao is silent'' wit of the Zen mind being the empty mind, that seems to go along with the <math>\mathrm{Ex}~\!</math> interpretation, and the way from ''the way that's marked is not the true way'' to ''the mark that's marked is not the remarkable mark'' and to ''the sign that's signed is not the significant sign'' of the <math>\mathrm{En}~\!</math> interpretation, reminding us that the sign is not the object, no matter how apt the image. And later, when my discovery of the cactus graph extension of logical graphs led to the leimons of neural pools, where <math>\mathrm{En}~\!</math> says that truth is an active condition, while <math>\mathrm{Ex}~\!</math> says that sooth is a quiescent mind, all these themes got reinforced more still. |

We hold these truths to be self-iconic, but they come in complementary couples, in consort to the flip-side of the tao. | We hold these truths to be self-iconic, but they come in complementary couples, in consort to the flip-side of the tao. | ||

| Line 394: | Line 429: | ||

A ''sort'' of signs is more formally known as an ''equivalence class'' (EC). There are in general many sorts of sorts of signs that we might wish to consider in this inquiry, but let's begin with the sort of signs all of whose members denote the same object as their referent, a sort of signs to be henceforth referred to as a ''referential equivalence class'' (REC). | A ''sort'' of signs is more formally known as an ''equivalence class'' (EC). There are in general many sorts of sorts of signs that we might wish to consider in this inquiry, but let's begin with the sort of signs all of whose members denote the same object as their referent, a sort of signs to be henceforth referred to as a ''referential equivalence class'' (REC). | ||

| − | * | + | :* [http://web.archive.org/web/20150224133200/http://stderr.org/pipermail/inquiry/2005-October/thread.html#3104 Inquiry List • Futures Of Logical Graphs] |

| − | * | + | :* [http://web.archive.org/web/20120206123011/http://stderr.org/pipermail/inquiry/2005-October/003113.html Inquiry List • Futures Of Logical Graphs • Note 5] |

Toward the outset of this excursion, I mentioned the distinction between a ''pointwise-restricted iconic map'' or a ''pointedly rigid iconic map'' (PRIM) and a ''system-wide iconic map'' (SWIM). The time has come to make use of that mention. | Toward the outset of this excursion, I mentioned the distinction between a ''pointwise-restricted iconic map'' or a ''pointedly rigid iconic map'' (PRIM) and a ''system-wide iconic map'' (SWIM). The time has come to make use of that mention. | ||

| − | We are once again concerned with ''categories of structured items'' ( | + | We are once again concerned with ''categories of structured items'' (COSIs) and the categories of mappings between them, indeed, the two ideas are all but inseparable, there being many good reasons to consider the very notion of structure to be most clearly defined in terms of the brands of "arrows", maps, or morphisms between items that are admitted to the category in view. |

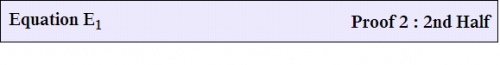

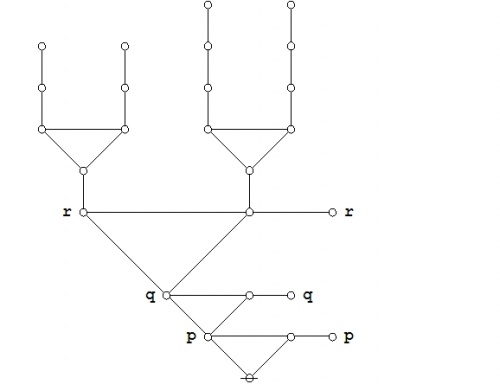

| − | At the level of the ''primary arithmetic'' | + | At the level of the ''primary arithmetic'', we have a set-up like this: |

| − | {| align="center" | + | {| align="center" cellpadding="10" |

| | | | ||

<pre> | <pre> | ||

| − | + | ||

| − | Categories | + | Categories !O! !S! |

| − | + | / \ / \ | |

| − | + | / \ / \ | |

| − | + | / \ / \ | |

| − | + | / \ / \ | |

| − | + | / \ Denotes / \ | |

| − | Individuals | + | Individuals {F} {T} <------ {...} {...} |

| − | + | | | /|\ /|\ | |

| − | + | | | / | \ / | \ | |

| − | + | | | / | \ / | \ | |

| − | Structures | + | Structures F T o o o o o o |

| − | + | ||

| − | + | o | |

| − | + | | | |

| − | + | o o o o | |

| − | + | | \ / | | |

| − | + | o o o o o o | |

| − | + | | | | \ / | | |

| − | + | F T @ @ @ @ @ @ | |

| − | + | ||

</pre> | </pre> | ||

|} | |} | ||

| − | The object domain | + | The object domain <math>O~\!</math> is the boolean domain <math>\mathbb{B} = \{ \mathrm{falsity}, \mathrm{truth} \},</math> the semiotic domain <math>S~\!</math> is any of the spaces isomorphic to the set of rooted trees, matched-up parentheses, or unlabeled alpha graphs, and we treat a couple of ''denotation maps'' <math>\mathrm{den}_\text{en}, \mathrm{den}_\text{ex} : S \to O.</math> |

| − | Either one of the denotation maps induces the same partition of | + | Either one of the denotation maps induces the same partition of <math>S~\!</math> into RECs, a partition whose structure is suggested by the following two sets of strings: |

| − | + | {| align="center" cellpadding="10" width="90%" | |

| − | + | | | |

| − | + | <math>\begin{matrix} | |

| + | \{ | ||

| + | & {}^{\backprime\backprime} \texttt{~} {}^{\prime\prime}, | ||

| + | & {}^{\backprime\backprime} \texttt{((~))} {}^{\prime\prime}, | ||

| + | & {}^{\backprime\backprime} \texttt{((~)(~))} {}^{\prime\prime}, | ||

| + | & \ldots | ||

| + | & \} | ||

| + | \\[10pt] | ||

| + | \{ | ||

| + | & {}^{\backprime\backprime} \texttt{(~)} {}^{\prime\prime}, | ||

| + | & {}^{\backprime\backprime} \texttt{(~)(~)} {}^{\prime\prime}, | ||

| + | & {}^{\backprime\backprime} \texttt{(((~)))} {}^{\prime\prime}, | ||

| + | & \ldots | ||

| + | & \} | ||

| + | \end{matrix}</math> | ||

| + | |} | ||

These are of course the parenthesis strings that correspond to the rooted trees that are shown in the lower right corner of the Figure. | These are of course the parenthesis strings that correspond to the rooted trees that are shown in the lower right corner of the Figure. | ||
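To see the partition take shape, one can enumerate small parenthesis strings and sort them by what they denote. The following is a sketch under assumptions, not a definitive implementation: strings are written without internal blanks, the arithmetic initials are assumed to be ( )( ) = ( ) and (( )) = blank, and the helper names <code>balanced</code>, <code>canonical</code>, <code>den_en</code>, <code>den_ex</code>, and <code>partition</code> are hypothetical.

```python
from collections import defaultdict

def balanced(n):
    """Yield every well-formed parenthesis string with exactly n pairs."""
    if n == 0:
        yield ""
        return
    for k in range(n):
        for inner in balanced(k):
            for rest in balanced(n - 1 - k):
                yield "(" + inner + ")" + rest

def canonical(s):
    """Return "" (rooted node) or "()" (rooted edge) for a well-formed string."""
    stack = [False]            # True: this level so far reduces to "()"
    for ch in s:
        if ch == "(":
            stack.append(False)
        elif ch == ")":
            inner = stack.pop()
            # An enclosure of the blank is "()"; an enclosure of "()" is blank.
            stack[-1] = stack[-1] or not inner
    return "()" if stack[0] else ""

def den_en(s):
    """Entitative denotation: the rooted edge denotes truth."""
    return canonical(s) == "()"

def den_ex(s):
    """Existential denotation: the rooted node denotes truth."""
    return canonical(s) == ""

def partition(strings, den):
    """Group strings into blocks having the same denotation under den."""
    blocks = defaultdict(set)
    for s in strings:
        blocks[den(s)].add(s)
    return {frozenset(block) for block in blocks.values()}

signs = [s for n in range(4) for s in balanced(n)]
```

Comparing <code>partition(signs, den_en)</code> with <code>partition(signs, den_ex)</code> gives the same two blocks in either case. The maps swap the truth values attached to the blocks but leave the blocks themselves untouched, which is what it means for both to induce the same partition into RECs.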

| Line 442: | Line 492: | ||

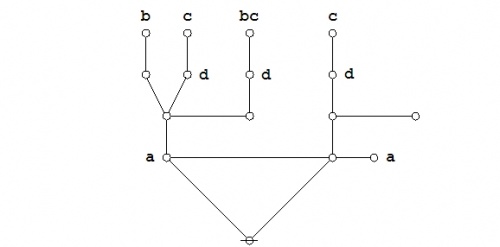

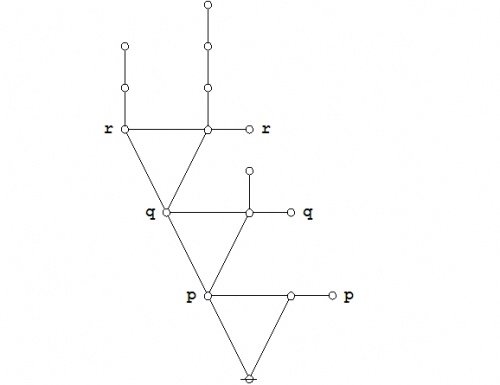

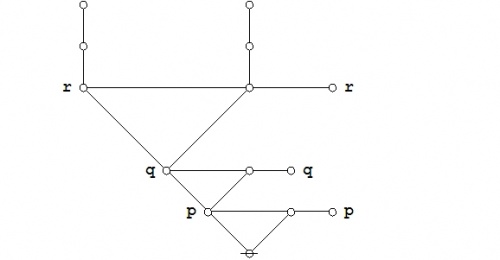

In thinking about mappings between categories of structured individuals, we can take each mapping in two parts. At the first level of analysis, there is the part that maps individuals to individuals. At the second level of analysis, there is the part that maps the structural parts of each individual to the structural parts of the individual that forms its counterpart under the first part of the mapping in question. | In thinking about mappings between categories of structured individuals, we can take each mapping in two parts. At the first level of analysis, there is the part that maps individuals to individuals. At the second level of analysis, there is the part that maps the structural parts of each individual to the structural parts of the individual that forms its counterpart under the first part of the mapping in question. | ||

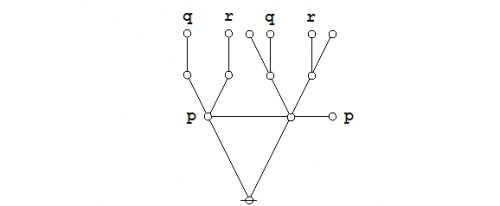

| − | The general scheme of things is suggested by the following Figure, where the mapping | + | The general scheme of things is suggested by the following Figure, where the mapping <math>f~\!</math> from COSI <math>U~\!</math> to COSI <math>V~\!</math> is analyzed in terms of a mapping <math>g~\!</math> that takes individuals to individuals, ignoring their inner structures, and a set of mappings <math>h_j,~\!</math> where <math>j~\!</math> ranges over the individuals of COSI <math>U,~\!</math> and where <math>h_j~\!</math> specifies just how the parts of <math>j~\!</math> map to the parts of <math>{g(j)},~\!</math> its counterpart under <math>{g}.~\!</math> |

| − | {| align="center" | + | {| align="center" cellpadding="10" |

| | | | ||

<pre> | <pre> | ||

| − | + | ||

| − | + | U f V | |

| − | + | @ --------------------> @ | |

| − | + | / \ / \ | |

| − | + | / \ / \ | |

| − | + | / \ / \ | |

| − | + | / \ / \ | |

| − | + | / \ g / \ | |

| − | + | o ... o --------> o ... o | |

| − | + | / \ / \ / \ / \ | |

| − | + | / \ / \ / \ / \ | |

| − | + | / \ / \ / \ / \ | |

| − | + | o->-o->-o o->-o->-o o->-o->-o o->-o->-o | |

| − | + | \ / | |

| − | + | \ / | |

| − | + | \ / | |

| − | + | \ / | |

| − | + | \ h_j / | |

| − | + | o-------->----------o | |

| − | + | ||

</pre> | </pre> | ||

|} | |} | ||

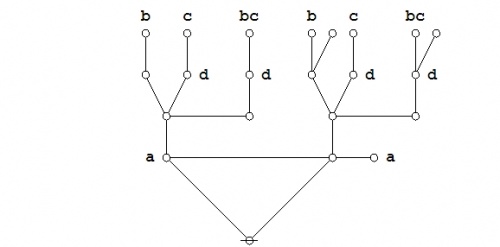

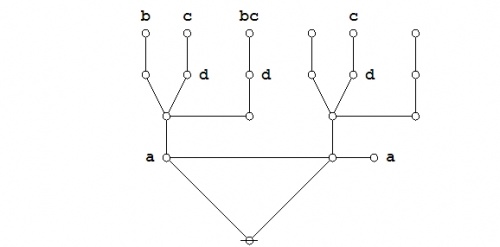

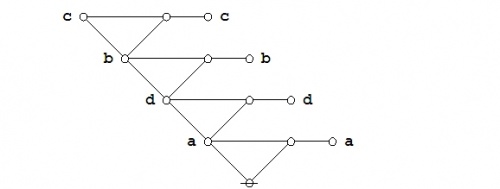

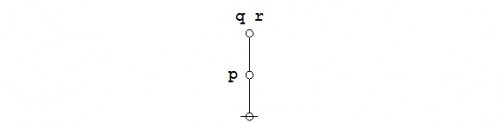

| − | Next time we'll apply this general scheme to the | + | Next time we'll apply this general scheme to the <math>\mathrm{En}~\!</math> and <math>\mathrm{Ex}~\!</math> interpretations of logical graphs, and see how it helps us to sort out the varieties of iconic mapping that are involved in that setting. |

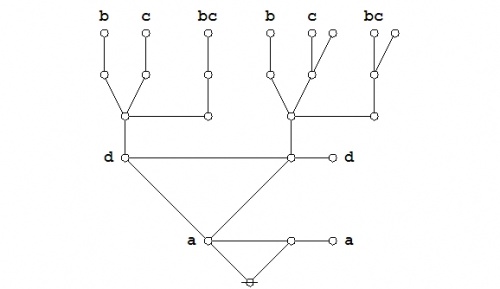

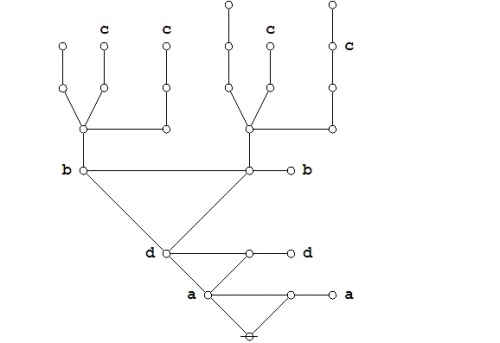

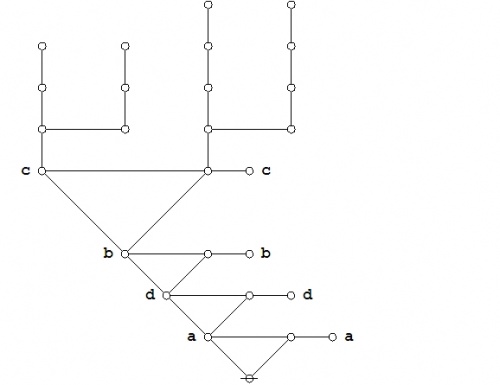

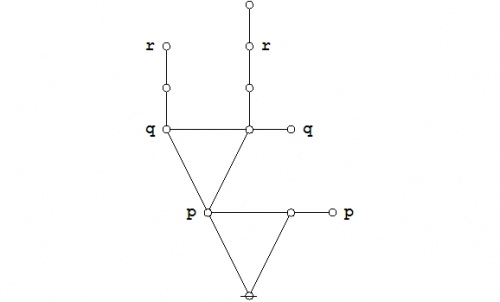

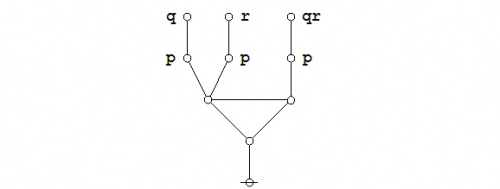

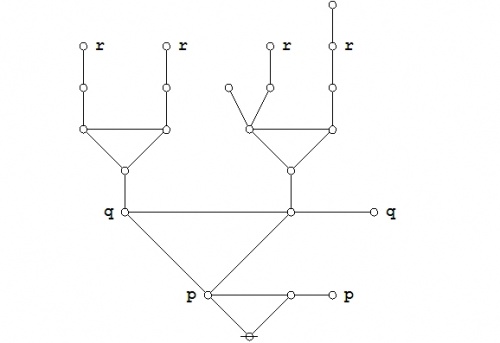

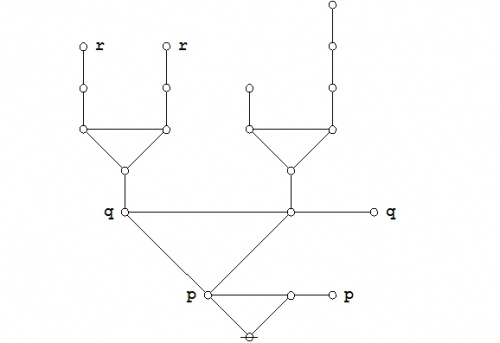

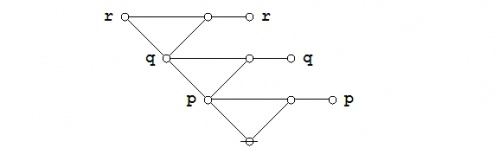

| − | Corresponding to the Entitative and Existential interpretations of the primary arithmetic, there are two distinct mappings from the sign domain | + | Corresponding to the Entitative and Existential interpretations of the primary arithmetic, there are two distinct mappings from the sign domain <math>S,~\!</math> containing the topological equivalents of bare and rooted trees, onto the object domain <math>O,~\!</math> containing the two objects whose conventional, ordinary, or meta-language names are ''falsity'' and ''truth'', respectively. |

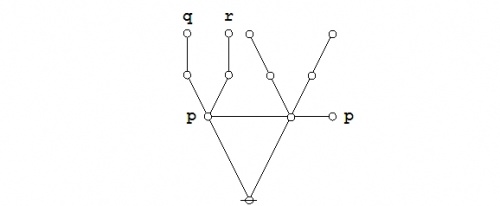

| − | The next two Figures suggest how one might view the interpretation maps as mappings from a COSI | + | The next two Figures suggest how one might view the interpretation maps as mappings from a COSI <math>S~\!</math> to a COSI <math>O.~\!</math> Here I have placed names of categories at the bottom, indices of individuals at the next level, and extended upward from there whatever structures the individuals may have. |

Here is the Figure for the Entitative interpretation: | Here is the Figure for the Entitative interpretation: | ||

| − | {| align="center" | + | {| align="center" cellpadding="10" |

| | | | ||

<pre> | <pre> | ||

| − | + | ||

| − | + | o | |

| − | + | | | |

| − | + | o o o o | |

| − | + | | | \ / | |

| − | Structures | + | Structures F T @ @ ... @ @ ... |

| − | + | | | \ | / \ | / | |

| − | + | | | \ | / \ | / | |

| − | + | | | En \|/ \|/ | |

| − | Individuals | + | Individuals o o <----- o o |

| − | + | \ / \ / | |

| − | + | \ / \ / | |

| − | + | \ / \ / | |

| − | + | \ / \ / | |

| − | + | \ / \ / | |

| − | Categories | + | Categories !O! !S! |

| − | + | ||

</pre> | </pre> | ||

|} | |} | ||

| Line 503: | Line 553: | ||

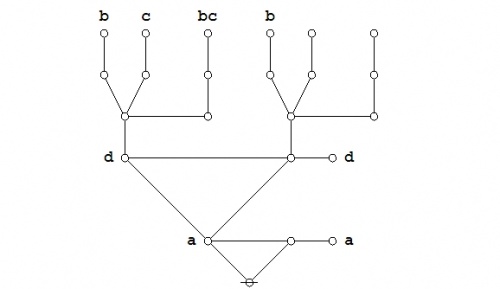

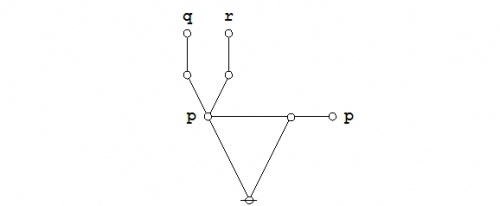

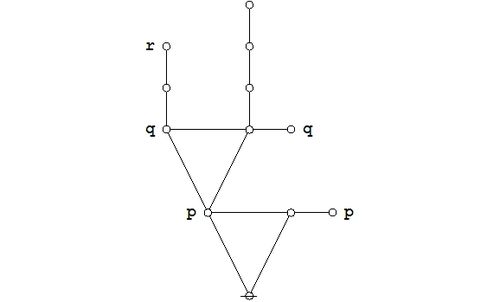

Here is the Figure for the Existential interpretation: | Here is the Figure for the Existential interpretation: | ||

| − | {| align="center" | + | {| align="center" cellpadding="10" |

| | | | ||

<pre> | <pre> | ||

| − | + | ||

| − | + | o | |

| − | + | | | |

| − | + | o o o o | |

| − | + | | \ / | | |

| − | Structures | + | Structures F T @ @ ... @ @ ... |

| − | + | | | \ | / \ | / | |

| − | + | | | \ | / \ | / | |

| − | + | | | Ex \|/ \|/ | |

| − | Individuals | + | Individuals o o <----- o o |

| − | + | \ / \ / | |

| − | + | \ / \ / | |

| − | + | \ / \ / | |

| − | + | \ / \ / | |

| − | + | \ / \ / | |

| − | Categories | + | Categories !O! !S! |

| − | + | ||

</pre> | </pre> | ||

|} | |} | ||

| − | Note that the structure of a tree begins at its root, marked by an "O". The objects in | + | Note that the structure of a tree begins at its root, marked by an "@". The objects in <math>O~\!</math> have no further structure to speak of, so there is nothing much happening in the object domain <math>O~\!</math> between the level of individuals and the level of structures. In the sign domain <math>S~\!</math> the individuals are the parts of the partition into referential equivalence classes, each part of which contains a countable infinity of syntactic structures, rooted trees, or whatever form one views their structures taking. The sense of the Figures is that the interpretation under consideration maps the individual on the left side of <math>S~\!</math> to the individual on the left side of <math>O~\!</math> and maps the individual on the right side of <math>S~\!</math> to the individual on the right side of <math>O.~\!</math> |

An iconic mapping, that gets formalized in mathematical terms as a ''morphism'', is said to be a ''structure-preserving map''. This does not mean that all of the structure of the source domain is preserved in the map ''images'' of the target domain, but only ''some'' of the structure, that is, specific types of relation that are defined among the elements of the source and target, respectively. | An iconic mapping, that gets formalized in mathematical terms as a ''morphism'', is said to be a ''structure-preserving map''. This does not mean that all of the structure of the source domain is preserved in the map ''images'' of the target domain, but only ''some'' of the structure, that is, specific types of relation that are defined among the elements of the source and target, respectively. | ||

| − | For example, let's start with the archetype of all morphisms, namely, a ''linear function'' or a ''linear mapping'' | + | For example, let's start with the archetype of all morphisms, namely, a ''linear function'' or a ''linear mapping'' <math>f : X \to Y.</math> |

| − | To say that the function | + | To say that the function <math>f~\!</math> is ''linear'' is to say that we have already got in mind a couple of relations on <math>X~\!</math> and <math>Y~\!</math> that have forms roughly analogous to "addition tables", so let's signify their operation by means of the symbols <math>{}^{\backprime\backprime} \# {}^{\prime\prime}</math> for addition in <math>X~\!</math> and <math>{}^{\backprime\backprime} + {}^{\prime\prime}</math> for addition in <math>Y.~\!</math> |

| − | More exactly, the use of | + | More exactly, the use of <math>{}^{\backprime\backprime} \# {}^{\prime\prime}</math> refers to a 3-adic relation <math>L_X \subseteq X \times X \times X</math> that licenses the formula <math>a ~\#~ b = c</math> just when <math>(a, b, c)~\!</math> is in <math>L_X~\!</math> and the use of <math>{}^{\backprime\backprime} + {}^{\prime\prime}</math> refers to a 3-adic relation <math>L_Y \subseteq Y \times Y \times Y</math> that licenses the formula <math>p + q = r~\!</math> just when <math>(p, q, r)~\!</math> is in <math>L_Y.~\!</math> |

| − | In this setting | + | In this setting the mapping <math>f : X \to Y</math> is said to be ''linear'', and to ''preserve'' the structure of <math>L_X~\!</math> in the structure of <math>L_Y,~\!</math> if and only if <math>f(a ~\#~ b) = f(a) + f(b),</math> for all pairs <math>a, b~\!</math> in <math>X.~\!</math> In other words, the function <math>f~\!</math> ''distributes'' over the two additions, from <math>\#</math> to <math>+,~\!</math> just as if <math>f~\!</math> were a form of multiplication, analogous to <math>m(a + b) = ma + mb.~\!</math> |

| − | Writing this more directly in terms of the 3-adic relations | + | Writing this more directly in terms of the 3-adic relations <math>L_X~\!</math> and <math>L_Y~\!</math> instead of via their operation symbols, we would say that <math>f : X \to Y</math> is linear with regard to <math>L_X~\!</math> and <math>L_Y~\!</math> if and only if <math>(a, b, c)~\!</math> being in the relation <math>L_X~\!</math> determines that its map image <math>(f(a), f(b), f(c))~\!</math> be in <math>L_Y.~\!</math> To see this, observe that <math>(a, b, c)~\!</math> being in <math>L_X~\!</math> implies that <math>c = a ~\#~ b,</math> and <math>(f(a), f(b), f(c))~\!</math> being in <math>L_Y~\!</math> implies that <math>f(c) = f(a) + f(b),~\!</math> so we have that <math>f(a ~\#~ b) = f(c) = f(a) + f(b),</math> and the two notions are one. |
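A finite example may help to confirm that the two formulations agree. The sketch below is an illustration under assumptions of my own choosing: X is taken to be the integers mod 6 with addition mod 6 in the role of #, Y is the integers mod 3 with addition mod 3 in the role of +, and f is reduction mod 3. None of these particular choices comes from the text, and the helper names are hypothetical.

```python
from itertools import product

X = range(6)   # assumed source domain: integers mod 6
Y = range(3)   # assumed target domain: integers mod 3

def f(a):
    """Candidate linear map from X to Y (reduction mod 3)."""
    return a % 3

def shifted(a):
    """A map that is not linear, included for contrast."""
    return (a + 1) % 3

# The 3-adic relations behind the two "addition tables".
L_X = {(a, b, (a + b) % 6) for a, b in product(X, X)}
L_Y = {(p, q, (p + q) % 3) for p, q in product(Y, Y)}

def distributes(fn):
    """Operational form: fn(a # b) = fn(a) + fn(b) for all a, b in X."""
    return all(fn((a + b) % 6) == (fn(a) + fn(b)) % 3
               for a, b in product(X, X))

def preserves_relation(fn):
    """Relational form: (a, b, c) in L_X implies (fn(a), fn(b), fn(c)) in L_Y."""
    return all((fn(a), fn(b), fn(c)) in L_Y for (a, b, c) in L_X)
```

Both tests pass for <code>f</code> and both fail for <code>shifted</code>, in keeping with the claim that the operational and the relational notions of linearity are one and the same.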

The idea of mappings that preserve 3-adic relations should ring a few bells here. | The idea of mappings that preserve 3-adic relations should ring a few bells here. | ||

| − | Once again into the breach between the interpretations | + | Once again into the breach between the interpretations <math>\mathrm{En}, \mathrm{Ex} : S \to O,</math> drawing but a single Figure in the sand and relying on the reader to recall: |

| − | + | {| align="center" cellpadding="10" width="90%" | |

| + | | | ||

| + | <p><math>\mathrm{En}~\!</math> maps every tree on the left side of <math>S~\!</math> to the left side of <math>O.~\!</math></p> | ||

| − | + | <p><math>\mathrm{En}~\!</math> maps every tree on the right side of <math>S~\!</math> to the right side of <math>O.~\!</math></p> | |

| + | |- | ||

| + | | | ||

| + | <p><math>\mathrm{Ex}~\!</math> maps every tree on the left side of <math>S~\!</math> to the right side of <math>O.~\!</math></p> | ||

| − | {| align="center" | + | <p><math>\mathrm{Ex}~\!</math> maps every tree on the right side of <math>S~\!</math> to the left side of <math>O.~\!</math></p> |

| + | |} | ||

| + | |||

| + | {| align="center" cellpadding="10" | ||

| | | | ||

<pre> | <pre> | ||

| − | + | ||

| − | + | o | |

| − | + | | | |

| − | + | o o o o | |

| − | + | | | \ / | |

| − | Structures | + | Structures F T @ @ ... @ @ ... |

| − | + | | | \ | / \ | / | |

| − | + | | | \ | / \ | / | |

| − | + | | | En \|/ \|/ | |

| − | Individuals | + | Individuals o o <----- o o |

| − | + | \ / Ex \ / | |

| − | + | \ / \ / | |

| − | + | \ / \ / | |

| − | + | \ / \ / | |

| − | + | \ / \ / | |

| − | Categories | + | Categories !O! !S! |

| − | + | ||

</pre> | </pre> | ||

|} | |} | ||

| − | + | Those who wish to say that these logical signs are iconic of their logical objects must not only find some reason that logic itself singles out one interpretation over the other, but, even if they succeed in that, they must further make us believe that every sign for Truth is iconic of Truth, while every sign for Falsity is iconic of Falsity. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | One of the questions that arises at this point, where we have a very small object domain <math>O = \{ \mathrm{falsity}, \mathrm{truth} \}</math> and a very large sign domain <math>S \cong \{ \text{rooted trees} \},</math> is the following: | |

| − | + | :* Why do we have so many ways of saying the same thing? | |

| − | + | In other words, what possible utility is there in a language having so many signs to denote the same object? Why not just restrict the language to a canonical collection of signs, each of which denotes one and only one object, exclusively and uniquely? Indeed, language reformers from time to time have proposed the design of languages that have just this property, but I think this is one of those places where natural evolution has luckily hit on a better plan than the sorts of intentional design that inexperienced designers typically craft. | |

| − | Of course, very little of this can be apparent at the level of primary arithmetic, but I think it should become a little more obvious as we enter the primary algebra. | + | The answer to the puzzle of semiotic multiplicity appears to have something to do with the use of language in interacting with a complex external world. The objective world throws its multiplicity of problems at us, and the first duty of language is to provide some expression of their structure, on the fly, as quickly as possible, in real time, as they come in, no matter how obscure our quick and dirty expressions of the problematic situation might otherwise be. Of course, very little of this can be apparent at the level of primary arithmetic, but I think it should become a little more obvious as we enter the primary algebra. |

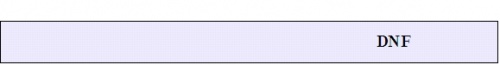

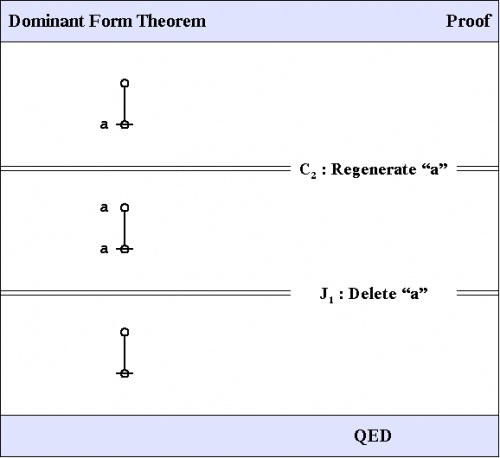

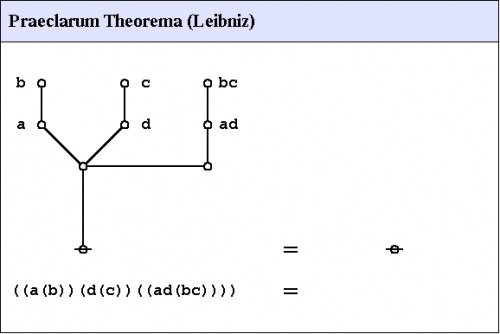

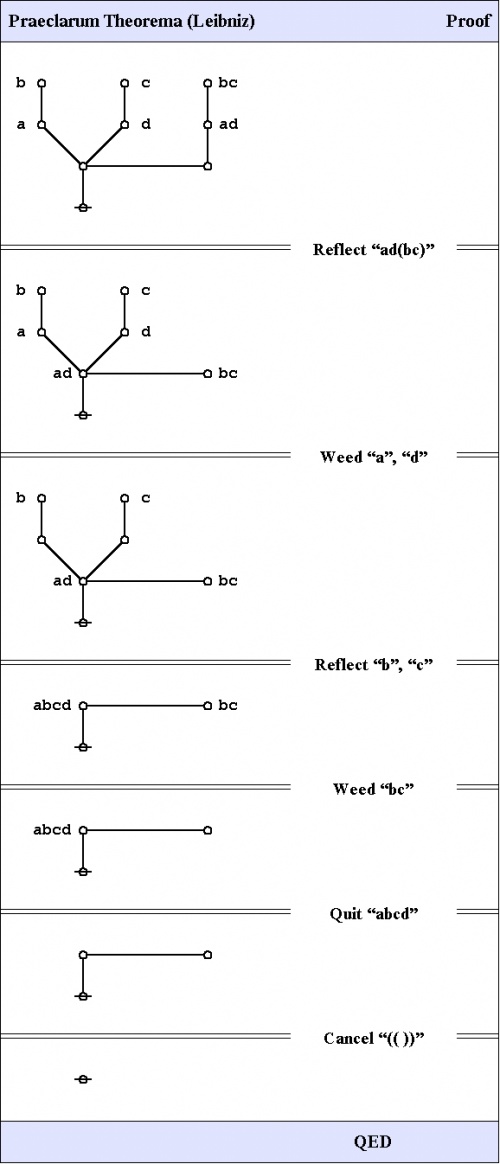

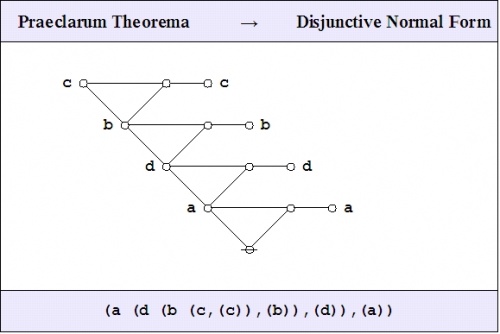

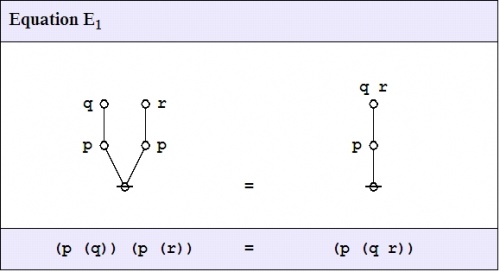

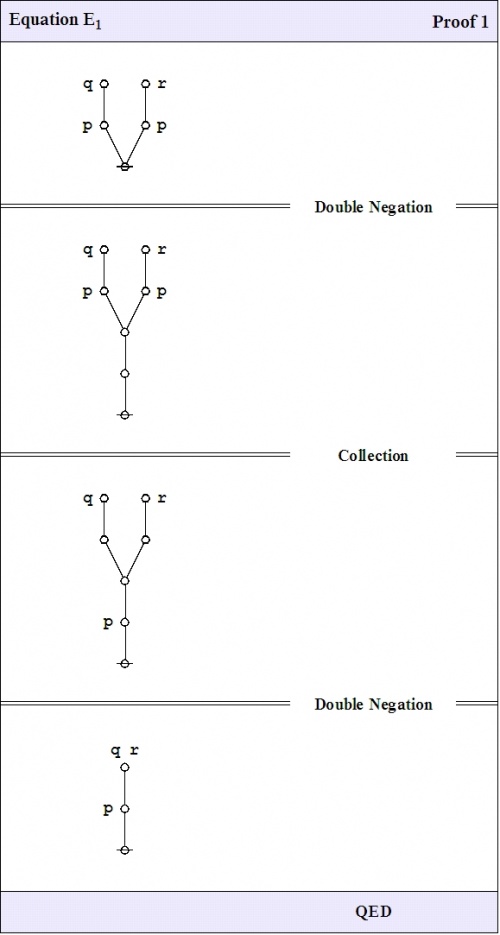

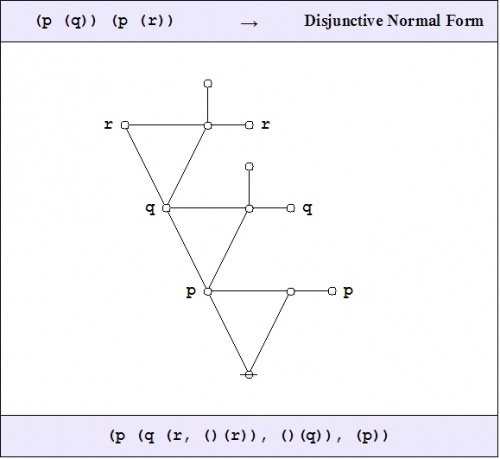

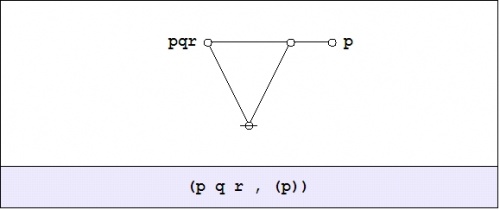

| − | I will now give a reference version of the CSP | + | I will now give a reference version of the CSP–GSB axioms for the abstract calculus that is formally recognizable in several senses as giving form to propositional logic. |

| − | The first order of business is to give the exact forms of the axioms that I use, devolving from Peirce's | + | The first order of business is to give the exact forms of the axioms that I use, devolving from Peirce's Logical Graphs via Spencer-Brown's ''Laws of Form'' (LOF). In formal proofs, I will use a variation of the annotation scheme from LOF to mark each step of the proof according to which axiom, or ''initial'', is being invoked to justify the corresponding step of syntactic transformation, whether it applies to graphs or to strings. |

| − | The axioms are just four in number, and they come in a couple of flavors: the ''arithmetic initials | + | The axioms are just four in number, and they come in a couple of flavors: the ''arithmetic initials'' <math>I_1~\!</math> and <math>I_2,~\!</math> and the ''algebraic initials'' <math>J_1~\!</math> and <math>J_2.~\!</math> |

{| align="center" cellpadding="10" | {| align="center" cellpadding="10" | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_20.jpg|500px]] |

|- | |- | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_21.jpg|500px]] |

|- | |- | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_22.jpg|500px]] |

|- | |- | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_23.jpg|500px]] |

|} | |} | ||

| − | Notice that all of the axioms in this set have the form of equations. This means that all of the inference steps they allow are reversible. In the proof annotation scheme below, I will use a double bar | + | Notice that all of the axioms in this set have the form of equations. This means that all of the inference steps they allow are reversible. In the proof annotation scheme below, I will use a double bar <math>=~\!=~\!=~\!=~\!=</math> to mark this fact, but I may at times leave it to the reader to pick which direction is the one required for applying the indicated axiom. |
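As a rough illustration of how the arithmetic initials act on parse strings, here is a minimal Python sketch. It assumes that the two arithmetic initials take the forms familiar from LOF, namely `()() = ()` and `(()) = ` (blank), since the Figures themselves are not reproduced in this text. The function name `reduce_arithmetic` is hypothetical, the input is assumed to be a balanced parenthesis string, and each equation is applied only in its simplifying direction even though both are reversible.

```python
def reduce_arithmetic(s):
    """Rewrite a balanced parenthesis string until neither initial applies.

    Assumed forms of the arithmetic initials (not taken from the Figures):
      "()()" = "()"    and    "(())" = ""  (the blank)
    """
    s = "".join(s.split())            # ignore any whitespace in the input
    while True:
        t = s.replace("(())", "").replace("()()", "()")
        if t == s:                    # fixed point: nothing left to simplify
            return s
        s = t


for text in ["(())", "()()", "((())())", "(()())"]:
    print(repr(text), "->", repr(reduce_arithmetic(text)))
```

For balanced input the loop stops at either the blank string or a single `()`, because any other balanced string still contains one of the two patterns.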

==Frequently used theorems== | ==Frequently used theorems== | ||

| Line 613: | Line 667: | ||

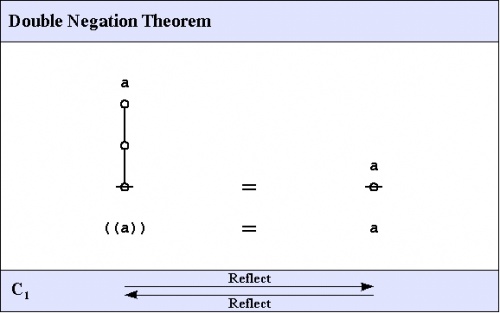

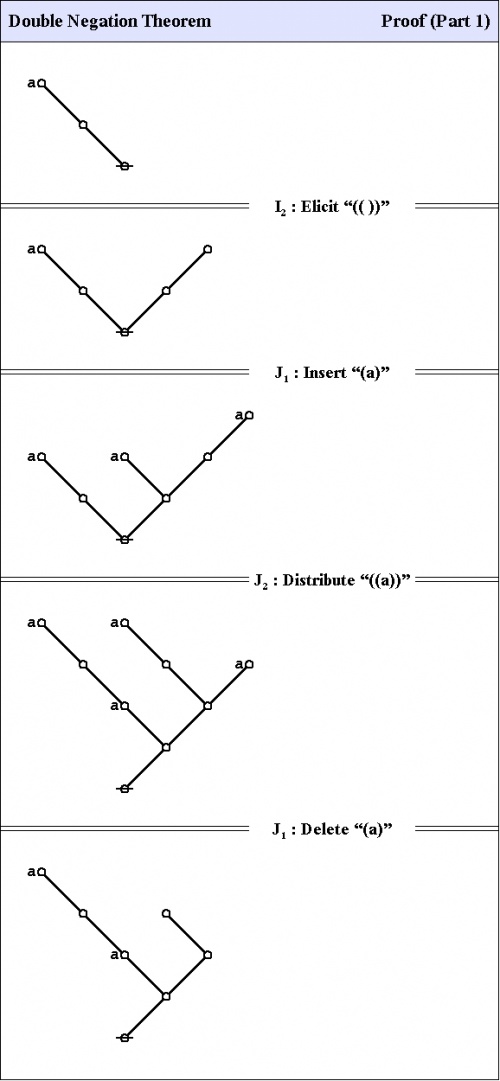

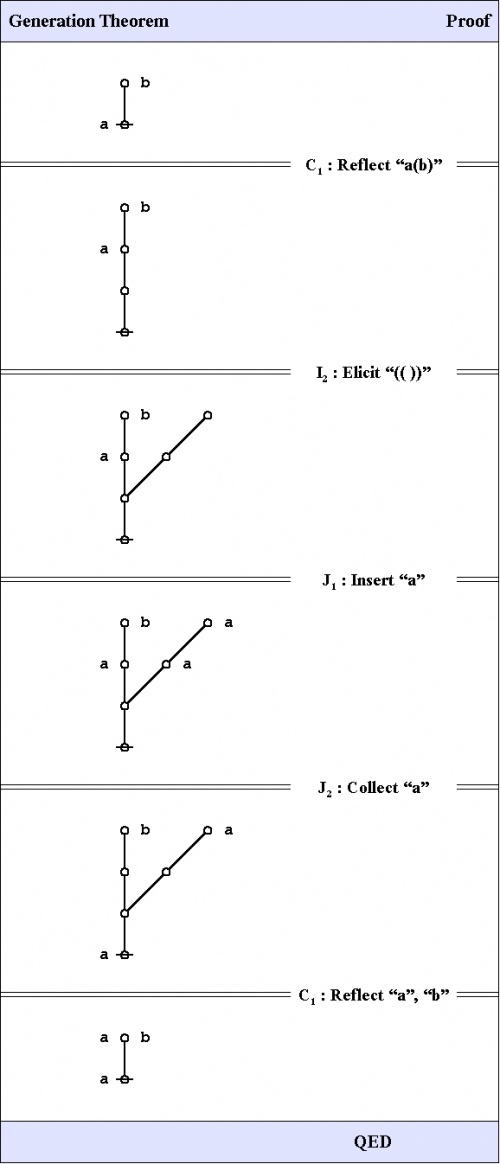

===C<sub>1</sub>. Double negation theorem=== | ===C<sub>1</sub>. Double negation theorem=== | ||

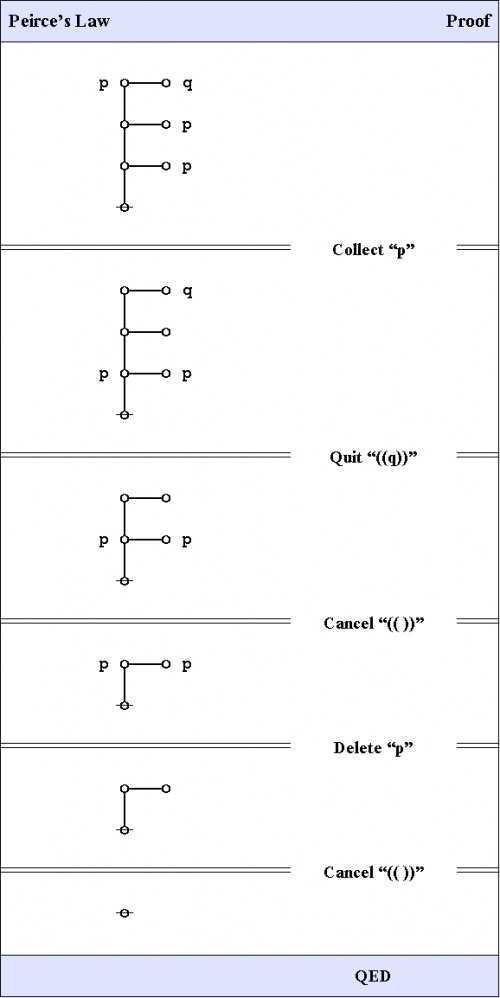

| − | The first theorem goes under the names of ''Consequence 1'' <math>(C_1)\!</math>, the ''double negation theorem'' (DNT), or ''Reflection''. | + | The first theorem goes under the names of ''Consequence 1'' <math>(C_1)~\!</math>, the ''double negation theorem'' (DNT), or ''Reflection''. |

{| align="center" cellpadding="10" | {| align="center" cellpadding="10" | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_24.jpg|500px]] |

|} | |} | ||
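A brute-force check of the double negation theorem can be sketched in Python. The sketch assumes the theorem has its usual LOF form `((a)) = a`, since the Figure is not reproduced in this text, and that expressions are written as parenthesis strings with single-letter variables. The names `parse`, `value`, and `equivalent` are hypothetical, and the input strings are assumed to be balanced.

```python
from itertools import product


def parse(s):
    """Parse a string such as "((a))" into nested lists; letters are variables."""
    stack = [[]]
    for ch in s:
        if ch == "(":
            stack.append([])
        elif ch == ")":
            node = stack.pop()
            stack[-1].append(node)
        elif ch.isalpha():
            stack[-1].append(ch)
    return stack[0]


def value(items, env, interp):
    """Evaluate a region: an enclosure negates, siblings combine by AND (Ex) or OR (En)."""
    vals = [env[x] if isinstance(x, str) else not value(x, env, interp)
            for x in items]
    return all(vals) if interp == "Ex" else any(vals)


def equivalent(lhs, rhs, interp):
    """Compare two expressions on every assignment of truth values."""
    names = sorted({ch for ch in lhs + rhs if ch.isalpha()})
    left, right = parse(lhs), parse(rhs)
    for bits in product([False, True], repeat=len(names)):
        env = dict(zip(names, bits))
        if value(left, env, interp) != value(right, env, interp):
            return False
    return True


for interp in ("En", "Ex"):
    print(interp, equivalent("((a))", "a", interp))
```

Running the check under both readings is a small reminder that the equation holds regardless of which interpretation is chosen.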

| Line 622: | Line 676: | ||

{| align="center" cellpadding="10" | {| align="center" cellpadding="10" | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_25.jpg|500px]] |

|} | |} | ||

{| align="center" cellpadding="10" | {| align="center" cellpadding="10" | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_26.jpg|500px]] |

|} | |} | ||

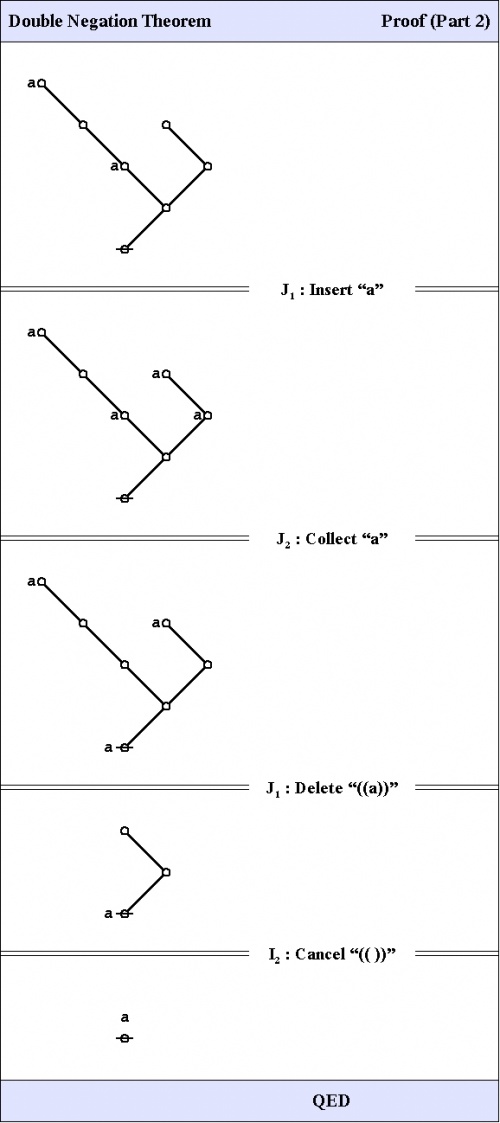

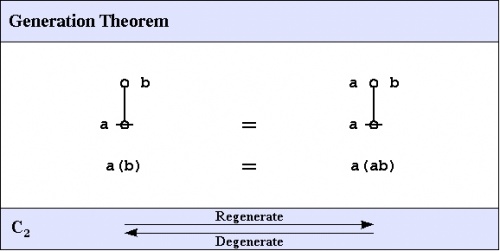

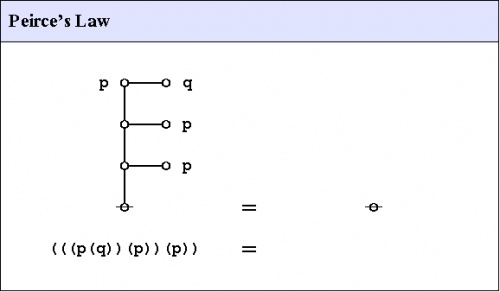

===C<sub>2</sub>. Generation theorem=== | ===C<sub>2</sub>. Generation theorem=== | ||

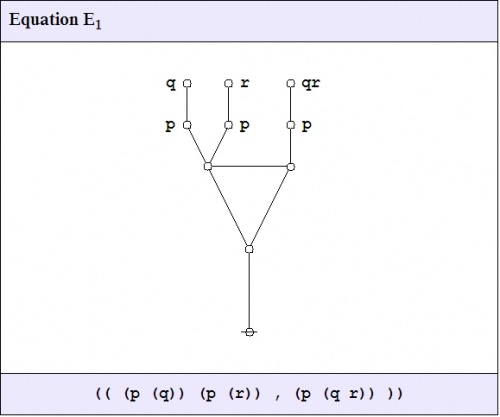

| − | One theorem of frequent use goes under the nickname of the ''weed and seed theorem'' (WAST). The proof is just an exercise in mathematical induction, once a suitable basis is laid down, and it will be left as an exercise for the reader. What the WAST says is that a label can be freely distributed or freely erased anywhere in a subtree whose root is labeled with that label. The second in our list of frequently used theorems is in fact the base case of this weed and seed theorem. In LOF, it goes by the names of ''Consequence 2'' <math>(C_2)\!</math> or ''Generation''. | + | One theorem of frequent use goes under the nickname of the ''weed and seed theorem'' (WAST). The proof is just an exercise in mathematical induction, once a suitable basis is laid down, and it will be left as an exercise for the reader. What the WAST says is that a label can be freely distributed or freely erased anywhere in a subtree whose root is labeled with that label. The second in our list of frequently used theorems is in fact the base case of this weed and seed theorem. In LOF, it goes by the names of ''Consequence 2'' <math>(C_2)~\!</math> or ''Generation''. |

{| align="center" cellpadding="10" | {| align="center" cellpadding="10" | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_27.jpg|500px]] |

|} | |} | ||
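The weeding half of the weed and seed theorem can be sketched in Python and checked by truth table. This is only an illustration under stated assumptions: a node is modelled as a pair of a label set and a tuple of child nodes, labels are single letters, values are computed under the existential reading, and the base case is taken to have the LOF form `(a b) b = (a) b`. The helper names `node`, `value`, `erase`, `weed`, `names`, and `same_value` are hypothetical.

```python
from itertools import product


def node(labels="", children=()):
    """Build a node from a string of single-letter labels and a list of children."""
    return (frozenset(labels), tuple(children))


def value(n, env):
    """Existential reading: labels and negated children are conjoined."""
    labels, children = n
    return all(env[x] for x in labels) and all(not value(c, env) for c in children)


def erase(n, label):
    """Remove a label from a node and from every node above it in the tree."""
    labels, children = n
    return (labels - {label}, tuple(erase(c, label) for c in children))


def weed(n):
    """Erase each label found at the root of n from all proper descendants of n."""
    labels, children = n
    for label in labels:
        children = tuple(erase(c, label) for c in children)
    return (labels, children)


def names(n):
    """Collect every label that occurs anywhere in the tree."""
    labels, children = n
    return set(labels).union(*(names(c) for c in children))


def same_value(m, n):
    """Check that two trees agree on every assignment of truth values."""
    vs = sorted(names(m) | names(n))
    return all(value(m, dict(zip(vs, bits))) == value(n, dict(zip(vs, bits)))
               for bits in product([False, True], repeat=len(vs)))


base = node("b", [node("ab")])                 # the string form (a b) b
deep = node("a", [node("b", [node("ac")])])    # a (b (a c))
print(weed(base) == node("b", [node("a")]), same_value(base, weed(base)))
print(same_value(deep, weed(deep)))
```

Seeding is the same equation read in the other direction, so a separate function is not needed to confirm it.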

| Line 640: | Line 694: | ||

{| align="center" cellpadding="10" | {| align="center" cellpadding="10" | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_28.jpg|500px]] |

|} | |} | ||

| Line 650: | Line 704: | ||

: In: [http://stderr.org/pipermail/inquiry/2005-October/thread.html#3104 FOLG] | : In: [http://stderr.org/pipermail/inquiry/2005-October/thread.html#3104 FOLG] | ||

| − | {| align="center" | + | {| align="center" cellpadding="10" |

| | | | ||

<pre> | <pre> | ||

| Line 671: | Line 725: | ||

What sorts of sign relation are implicated in this sign process? For simplicity, let's answer for the existential interpretation. | What sorts of sign relation are implicated in this sign process? For simplicity, let's answer for the existential interpretation. | ||

| − | In | + | In <math>\mathrm{Ex},</math> all four of the listed signs are expressions of Falsity, and, viewed within the special type of semiotic procedure that is being considered here, each sign interprets its predecessor in the sequence. Thus we might begin by drawing up this Table: |

| − | {| align="center" | + | {| align="center" cellpadding="10" |

| | | | ||

<pre> | <pre> | ||

| Line 694: | Line 748: | ||

Let's take another look at the semiotic sequence associated with a logical evaluation and the corresponding sample of a sign relation that we were looking at last time. | Let's take another look at the semiotic sequence associated with a logical evaluation and the corresponding sample of a sign relation that we were looking at last time. | ||

| − | {| align="center" | + | {| align="center" cellpadding="10" |

| | | | ||

<pre> | <pre> | ||

| Line 713: | Line 767: | ||

|} | |} | ||

| − | {| align="center" | + | {| align="center" cellpadding="10" |

| | | | ||

<pre> | <pre> | ||

| Line 732: | Line 786: | ||

The sign of equality "=", interpreted as logical equivalence "⇔", that marked our steps in the process of conducting the evaluation, is evidently intended to denote an equivalence relation, and this is a 2-adic relation that is reflexive, symmetric, and transitive. If we then pass to the reflexive, symmetric, transitive closure of the <''s'', ''i''> pairs that occur in our initial sample, attaching the constant reference to Falsity in the object domain, we will sweep out a more complete selection of the sign relation that inheres in the definition of the primary logical arithmetic. | The sign of equality "=", interpreted as logical equivalence "⇔", that marked our steps in the process of conducting the evaluation, is evidently intended to denote an equivalence relation, and this is a 2-adic relation that is reflexive, symmetric, and transitive. If we then pass to the reflexive, symmetric, transitive closure of the <''s'', ''i''> pairs that occur in our initial sample, attaching the constant reference to Falsity in the object domain, we will sweep out a more complete selection of the sign relation that inheres in the definition of the primary logical arithmetic. | ||
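The passage to the reflexive, symmetric, transitive closure can be sketched in Python. The names `s1` through `s4` are placeholders for the four signs of the evaluation sequence, the sample pairs are assumed to link each sign to the next one in that sequence, and `equivalence_closure` is a hypothetical helper built on a simple union-find. None of these names come from the article.

```python
def equivalence_closure(pairs):
    """Return the reflexive, symmetric, transitive closure of a set of pairs."""
    parent = {}

    def find(x):
        # Locate the representative of x, shortening the path along the way.
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for s, i in pairs:
        parent[find(s)] = find(i)     # merge the two classes

    elems = list(parent)
    return {(x, y) for x in elems for y in elems if find(x) == find(y)}


# Placeholder sample: each sign paired with its neighbour in the sequence.
sample = [("s1", "s2"), ("s2", "s3"), ("s3", "s4")]
closure = equivalence_closure(sample)

# Attach the constant object to obtain triples of the form (object, sign, interpretant).
triples = {("Falsity", s, i) for (s, i) in closure}
print(len(closure), len(triples))
```

With four signs in a single equivalence class the closure contains all sixteen ordered pairs, each of which yields one triple with Falsity as its object.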

| − | {| align="center" | + | {| align="center" cellpadding="10" |

| | | | ||

<pre> | <pre> | ||

| Line 795: | Line 849: | ||

Before we leave it for richer coasts — not to say we won't find ourselves returning eternally — let's note one other feature of our randomly chosen microcosm, one I suspect we'll see many echoes of in the macrocosm of our future wanderings. | Before we leave it for richer coasts — not to say we won't find ourselves returning eternally — let's note one other feature of our randomly chosen microcosm, one I suspect we'll see many echoes of in the macrocosm of our future wanderings. | ||

| − | {| align="center" | + | {| align="center" cellpadding="10" |

| | | | ||

<pre> | <pre> | ||

| Line 818: | Line 872: | ||

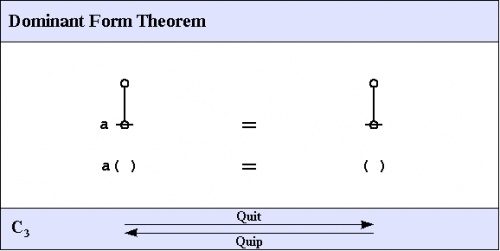

===C<sub>3</sub>. Dominant form theorem=== | ===C<sub>3</sub>. Dominant form theorem=== | ||

| − | The third of the frequently used theorems of service to this survey is one that Spencer-Brown annotates as ''Consequence 3'' <math>(C_3)\!</math> or ''Integration''. A better mnemonic might be ''dominance and recession theorem'' (DART), but perhaps the brevity of ''dominant form theorem'' (DFT) is sufficient reminder of its double-edged role in proofs. | + | The third of the frequently used theorems of service to this survey is one that Spencer-Brown annotates as ''Consequence 3'' <math>(C_3)~\!</math> or ''Integration''. A better mnemonic might be ''dominance and recession theorem'' (DART), but perhaps the brevity of ''dominant form theorem'' (DFT) is sufficient reminder of its double-edged role in proofs. |

{| align="center" cellpadding="10" | {| align="center" cellpadding="10" | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_29.jpg|500px]] |

|} | |} | ||

| Line 827: | Line 881: | ||

{| align="center" cellpadding="10" | {| align="center" cellpadding="10" | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_30.jpg|500px]] |

|} | |} | ||

| Line 847: | Line 901: | ||

{| align="center" cellpadding="10" | {| align="center" cellpadding="10" | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_31.jpg|500px]] |

|} | |} | ||

| Line 853: | Line 907: | ||

{| align="center" cellpadding="10" | {| align="center" cellpadding="10" | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_32.jpg|500px]] |

|} | |} | ||

| Line 877: | Line 931: | ||

{| align="center" cellpadding="10" | {| align="center" cellpadding="10" | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_33.jpg|500px]] |

|} | |} | ||

| Line 883: | Line 937: | ||

{| align="center" cellpadding="10" | {| align="center" cellpadding="10" | ||

| − | | [[ | + | | [[File:Logical_Graph_Figure_34.jpg|500px]] |

|} | |} | ||

| Line 892: | Line 946: | ||

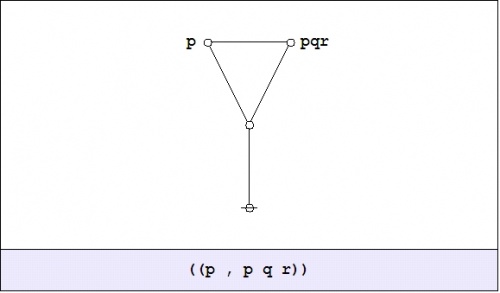

For example, consider the following expression: | For example, consider the following expression: | ||

| − | {| align="center" | + | {| align="center" cellpadding="10" |

| | | | ||

<pre> | <pre> | ||

| − | + | ||

| − | + | a a | |

| − | + | o-----o | |

| − | + | | | |

| − | + | | | |

| − | + | @ | |

| − | + | ||

</pre> | </pre> | ||

|} | |} | ||

| Line 907: | Line 961: | ||

We may regard this algebraic expression as a general expression for an infinite set of arithmetic expressions, starting like so: | We may regard this algebraic expression as a general expression for an infinite set of arithmetic expressions, starting like so: | ||

| − | {| align="center" | + | {| align="center" cellpadding="10" |

| | | | ||

<pre> | <pre> | ||

| − | + | ||

| − | + | o o o o o o | |

| − | + | | | \ / \ / | |

| − | + | o o o o o o o o o o o o o o o o | |

| − | + | | | \ / \ / | | \|/ \|/ | | | |

| − | + | o-----o o-----o o-----o o-----o o-----o o-----o | |

| − | + | | | | | | | | |

| − | + | | | | | | | | |

| − | + | @ @ @ @ @ @ | |

| − | + | ||

</pre> | </pre> | ||

|} | |} | ||

| Line 925: | Line 979: | ||

Now consider what this says about the following algebraic law: | Now consider what this says about the following algebraic law: | ||

| − | {| align="center" | + | {| align="center" cellpadding="10" |

| | | | ||

<pre> | <pre> | ||

| − | + | ||

| − | + | a a | |

| − | + | o-----o | |

| − | + | | | |

| − | + | | | |

| − | + | @ = @ | |

| − | + | ||

</pre> | </pre> | ||

|} | |} | ||

| Line 978: | Line 1,032: | ||

To begin with a concrete case that's as easy as possible, let's examine this extremely simple algebraic expression: | To begin with a concrete case that's as easy as possible, let's examine this extremely simple algebraic expression: | ||

| − | {| align="center" | + | {| align="center" cellpadding="10" |

| | | | ||

<pre> | <pre> | ||

| − | + | ||

| − | + | a | |

| − | + | o | |

| − | + | | | |

| − | + | @ | |

| − | + | ||

</pre> | </pre> | ||

|} | |} | ||

| Line 994: | Line 1,048: | ||